What Is Bagging In Machine Learning

By: Everly

Ensemble Methods: Bagging and Boosting

Ensemble learning has revolutionized the way one approaches an ML problem. This article revolves around bagging, an ensemble learning technique. What is Bagging in Machine

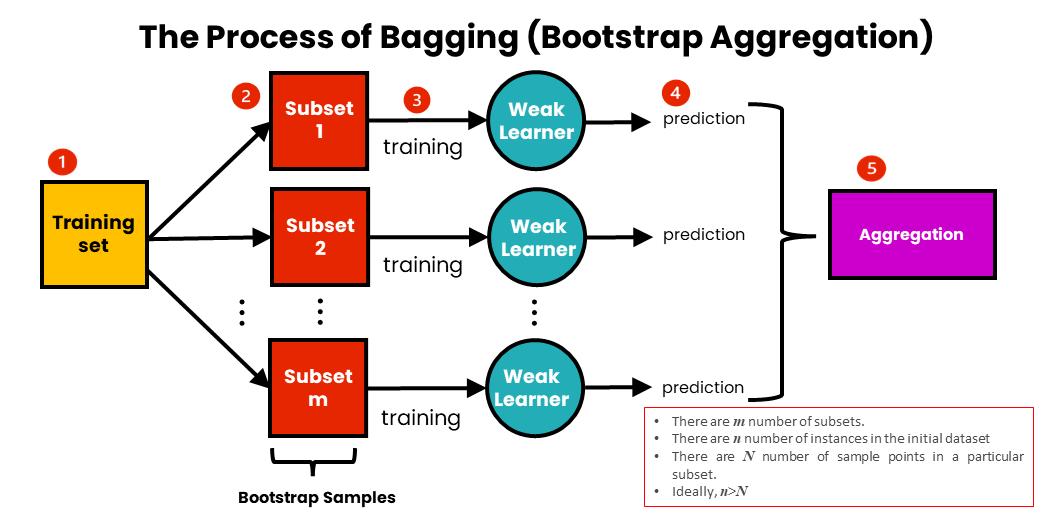

Bagging Flow Chart. The following steps are involved in implementing bagging in machine learning: Select a Base Model: Select a base model that is known to perform well on a particular task. The

Conclusion. The key takeaways from this article are: Ensemble learning helps in

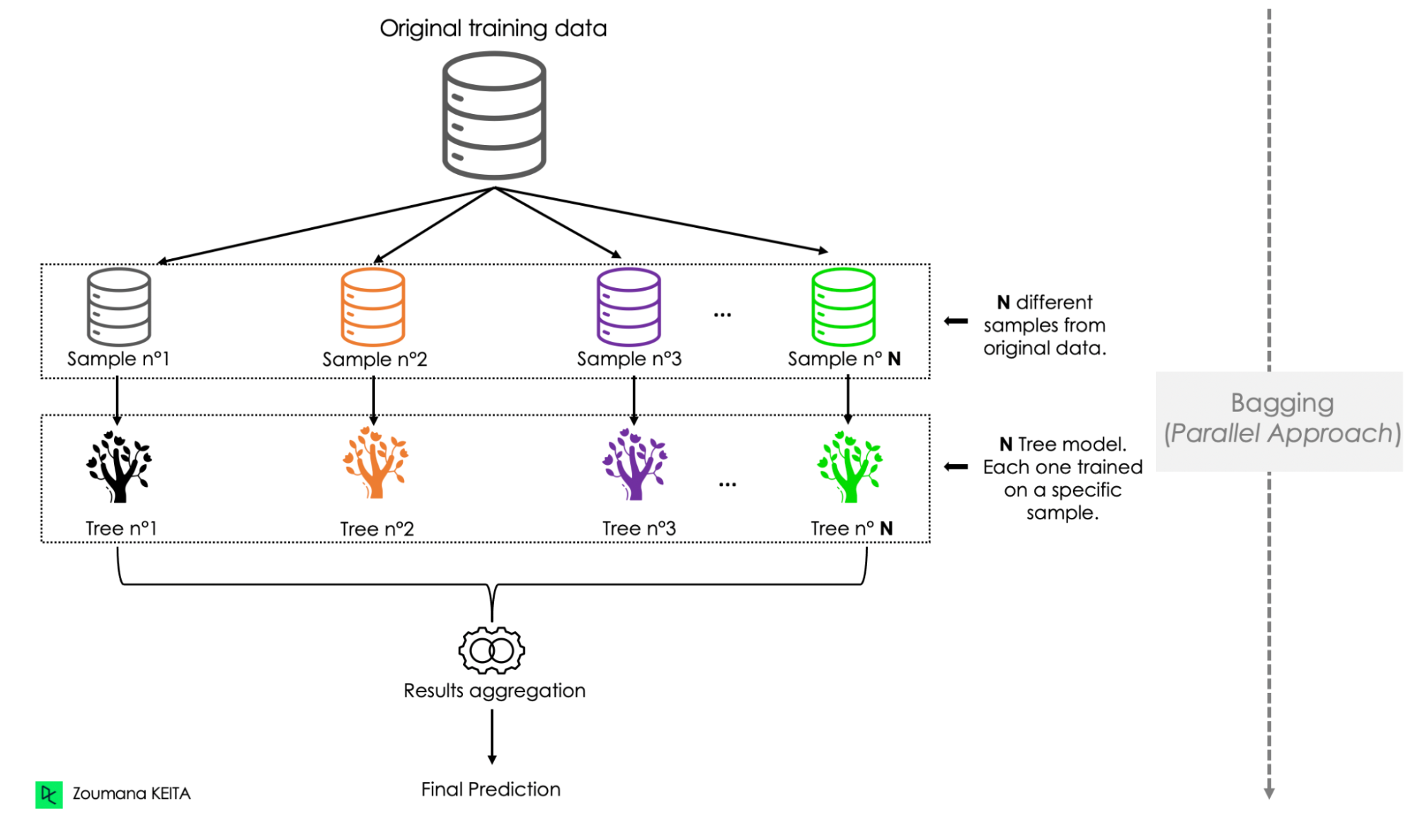

Bagging is a well-known method to generate a variety of predictors and then straightforwardly combine them. The term “bagging” comes from Bootstrap Aggregating. In this case, we employ the same learning
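
The "bootstrap" half of the name can be illustrated with a minimal sketch in plain Python. The helper name `bootstrap_sample`, the toy dataset, and the fixed seed are assumptions made for illustration only. A bootstrap sample draws as many items as the original set, with replacement, so some items repeat and others are left out (for large sets, roughly 63% of the distinct items appear in each sample).

```python
import random

def bootstrap_sample(data, rng):
    """Draw len(data) items from data uniformly at random, with replacement."""
    return [rng.choice(data) for _ in data]

rng = random.Random(0)          # fixed seed, assumed here only for repeatability
data = list(range(1000))        # toy dataset of 1000 distinct items
sample = bootstrap_sample(data, rng)

# Fraction of the original items that made it into this bootstrap sample.
unique_fraction = len(set(sample)) / len(data)
print(f"sample size: {len(sample)}, unique fraction: {unique_fraction:.3f}")
```

Each base model in a bagging ensemble would be trained on its own sample drawn this way.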

Bagging and Boosting have become fundamental techniques in machine learning. While Bagging offers robustness and simplicity through parallel learning, Boosting provides

When it comes to bagging and boosting in machine learning, the implementation of the former technique is done in the following ways: Step 1: Let’s say that a training set

- What Is Bagging In Machine Learning?

- Bagging and Pasting: Ensemble Learning using Scikit-Learn

- Bagging vs Boosting in Machine Learning

Ensemble Machine Learning (EML) techniques have markedly advanced in hydrological modeling over the past decade, significantly enhancing predictive accuracy for

Bagging, short for Bootstrap Aggregating, is an ensemble learning technique that improves the stability and accuracy of machine learning models by reducing overfitting and

Bagging is an ensemble method that reduces variance and improves accuracy by training multiple models on random subsets of data and averaging their predictions. Learn how
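
As a rough sketch of "training multiple models on random subsets of data and averaging their predictions", the following plain-Python example bags a 1-nearest-neighbour regressor. The base model, the synthetic `sin(x)` data, the noise level, the seed, and all function names are assumptions chosen for illustration, not a method taken from any of the sources above.

```python
import math
import random

def fit_1nn(points):
    """Fit a 1-nearest-neighbour regressor on (x, y) pairs (a high-variance base model)."""
    def predict(x):
        return min(points, key=lambda p: abs(p[0] - x))[1]
    return predict

def fit_bagged(points, n_models, rng):
    """Train n_models base models on bootstrap samples and average their predictions."""
    models = []
    for _ in range(n_models):
        sample = [rng.choice(points) for _ in points]  # drawn with replacement
        models.append(fit_1nn(sample))
    def predict(x):
        return sum(m(x) for m in models) / len(models)
    return predict

def mse(model, xs):
    """Mean squared error against the noise-free target sin(x)."""
    return sum((model(x) - math.sin(x)) ** 2 for x in xs) / len(xs)

rng = random.Random(42)  # fixed seed, assumed only for repeatability
train = [(i / 10, math.sin(i / 10) + rng.gauss(0, 1.0)) for i in range(200)]
test_xs = [i / 10 + 0.03 for i in range(200)]

single = fit_1nn(train)
bagged = fit_bagged(train, n_models=50, rng=rng)
single_mse = mse(single, test_xs)
bagged_mse = mse(bagged, test_xs)
print(f"single 1-NN MSE: {single_mse:.3f}")
print(f"bagged 1-NN MSE: {bagged_mse:.3f}")
```

On noisy data like this, the averaged ensemble would be expected to show a lower error than the single model, because averaging smooths out the noise that each individual model memorizes.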

In the realm of machine learning, where innovation knows no bounds, Bagging stands out as a formidable technique. This ensemble learning method has revolutionized

An Introduction to Bagging in Machine Learning by Zach Bobbitt Posted on November 23, 2020 November 24, 2020 When the relationship between a set of predictor

Bagging is an ensemble method that reduces variance and improves model stability by training multiple base models on different data subsets. Learn how bagging works, its applications, and how to implement it in

- Guide to Ensemble Learning

- Bagging, Boosting & Stacking Made Simple [3 How To Tutorials]

- Difference Between Bagging and Boosting

- What is Bagging in Machine Learning? A Guide With Examples

- Evolution of ensemble machine learning approaches in water

Introduction to Bagging. Bagging, an abbreviation for Bootstrap Aggregating, is a robust ensemble learning technique that seeks to minimize overfitting in machine learning

Bagging and Boosting are essential ensemble techniques in machine learning that enhance model performance by combining multiple models. Bagging reduces variance by training models independently on bootstrapped

Boosting in Machine Learning. Boosting is a powerful ensemble learning method in machine learning, specifically designed to improve the accuracy of predictive models by

What is Bagging in Machine Learning? Bagging is also called Bootstrap Aggregation and is an ensemble learning method that helps improve the performance and

Yes, bagging and boosting can be applied to various machine learning algorithms, including decision trees, neural networks, and support vector machines. However, decision

Bagging, also known as bootstrap aggregation, is an ensemble learning technique that combines the benefits of bootstrapping and aggregation to yield a stable model and improve the prediction performance of a machine
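
The "aggregation" step is commonly a majority vote for classification and an average for regression. A minimal sketch, with hypothetical helper names and made-up predictions:

```python
from collections import Counter

def majority_vote(predictions):
    """Return the most common label among the base models' predictions."""
    return Counter(predictions).most_common(1)[0][0]

def average(predictions):
    """Return the mean of the base models' numeric predictions."""
    return sum(predictions) / len(predictions)

# Made-up outputs of three base models for a single input.
print(majority_vote(["spam", "ham", "spam"]))  # prints "spam"
print(average([2.0, 3.0, 4.0]))                # prints 3.0
```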

Advantages of Bagging in Machine Learning:

- Bagging minimizes the overfitting of data
- It improves the model’s accuracy
- It deals with higher dimensional data efficiently

In machine learning, bagging is short for bootstrap aggregating, and it is a powerful ensemble learning technique designed to improve the stability

Bagging is an ensemble learning technique that reduces variance and overfitting by training multiple weak models on bootstrapped datasets and aggregating their predictions. Learn how bagging works, why to use it, and see

Ensemble learning techniques are quite popular in machine learning. These techniques work by training multiple models and combining their results to get the best

Bagging is a specific ensembling technique that is used to reduce the variance of a predictive model. Here, I’m talking about variance in the machine learning sense – i.e., how
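
A standard way to see why averaging reduces variance, stated here as a textbook identity rather than something taken from the excerpt above: assume $B$ predictors $\hat f_1, \dots, \hat f_B$, each with variance $\sigma^2$ and pairwise correlation $\rho$ (these symbols are introduced only for this illustration). Then the variance of their average is

$$
\operatorname{Var}\!\left(\frac{1}{B}\sum_{b=1}^{B}\hat f_b(x)\right) = \rho\,\sigma^2 + \frac{1-\rho}{B}\,\sigma^2 .
$$

For uncorrelated predictors ($\rho = 0$) this reduces to $\sigma^2 / B$. As $B$ grows, the second term vanishes and the correlation term $\rho\,\sigma^2$ remains, which is why the benefit depends on how independent the bootstrapped models are.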

Essence of Bagging Ensembles. The essence of bagging is about leveraging independent models. In this way, it might be the closest realization of the “wisdom of the

Machine Learning (CS771A), Ensemble Methods: Bagging and Boosting. Ensembles: Another Approach. Instead of training different models on the same data, train the same model multiple times

Bagging is a method of combining multiple machine learning models to achieve better accuracy and reliability in predictions. Bagging is widely used in various domains, such

The ensemble technique relies on the idea that aggregation of many classifiers and regressors will lead to a better prediction. In this chapter, we will introduce the ensemble

Q1. What is bagging and boosting in machine learning? A. Bagging and boosting are ensemble learning techniques in machine learning. Bagging trains multiple models on

Source: O’Reilly’s Hands-On Machine Learning with Scikit-Learn, Keras & TensorFlow. The most popular ensemble methods are: Bagging (Bootstrap Aggregation)

Hey there, data enthusiasts! Today, we’re going to dive into a crucial concept in machine learning called Bagging—an ensemble learning technique that can significantly improve the accuracy

The success of bagging led to the development of other ensemble techniques such as boosting, stacking, and many others. Today, these developments are an important part of machine
