Understanding a Simple LSTM in PyTorch

By: Everly

Figure 2: LSTM classifier. For classification, we take only the output of the very last LSTM step (LSTM 4 in the figure) and pass it through a simple feed-forward network.
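Here is a minimal sketch of the Figure 2 classifier. It assumes batch-first input of shape (batch, seq_len, features). The class name `LSTMClassifier` and all sizes are illustrative and do not come from the original.

```python
import torch
import torch.nn as nn


class LSTMClassifier(nn.Module):
    """Sequence classifier: LSTM encoder + feed-forward head on the last step."""

    def __init__(self, input_size: int, hidden_size: int, num_classes: int):
        super().__init__()
        self.lstm = nn.LSTM(input_size, hidden_size, batch_first=True)
        self.head = nn.Linear(hidden_size, num_classes)

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        # x: (batch, seq_len, input_size)
        out, (h_n, c_n) = self.lstm(x)
        # out[:, -1, :] is the hidden state after the final time step;
        # for a single-layer, unidirectional LSTM it equals h_n[-1].
        last = out[:, -1, :]
        return self.head(last)  # (batch, num_classes) logits


torch.manual_seed(0)
model = LSTMClassifier(input_size=8, hidden_size=16, num_classes=3)
x = torch.randn(4, 10, 8)
logits = model(x)
out, (h_n, _) = model.lstm(x)
print(logits.shape)  # torch.Size([4, 3])
print(torch.allclose(out[:, -1, :], h_n[-1]))  # True
```

For a bidirectional LSTM, `out[:, -1, :]` and `h_n` no longer carry the same information, so choose between them deliberately.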

Hello, I am trying to do a simple test: I want to show the network a number at t=0 and have it output that number k steps in the future. Meanwhile, the network is going to be shown…
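Below is a sketch of the delayed-recall setup. It assumes the inputs after t=0 are zeros, because the original description of what else the network sees is cut off. `make_delay_batch` and `DelayRecall` are hypothetical names. The model makes one prediction per time step, and only step k has a non-zero target.

```python
import torch
import torch.nn as nn


def make_delay_batch(batch_size: int, seq_len: int, k: int):
    """Hypothetical helper: show a number at t=0 (zeros afterwards, an assumption)
    and ask the network to output that number at t=k (target 0 elsewhere)."""
    x = torch.zeros(batch_size, seq_len, 1)
    x[:, 0, 0] = torch.rand(batch_size)  # the number to remember
    y = torch.zeros(batch_size, seq_len, 1)
    y[:, k, 0] = x[:, 0, 0]              # recall it k steps later
    return x, y


class DelayRecall(nn.Module):
    def __init__(self, hidden_size: int = 32):
        super().__init__()
        self.lstm = nn.LSTM(1, hidden_size, batch_first=True)
        self.out = nn.Linear(hidden_size, 1)

    def forward(self, x):
        h, _ = self.lstm(x)  # (batch, seq_len, hidden_size)
        return self.out(h)   # one prediction per time step


torch.manual_seed(0)
k, seq_len = 5, 10
model = DelayRecall()
opt = torch.optim.Adam(model.parameters(), lr=1e-2)
loss_fn = nn.MSELoss()
for step in range(200):
    x, y = make_delay_batch(64, seq_len, k)
    loss = loss_fn(model(x), y)
    opt.zero_grad()
    loss.backward()
    opt.step()
```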

Decoding LSTM using PyTorch


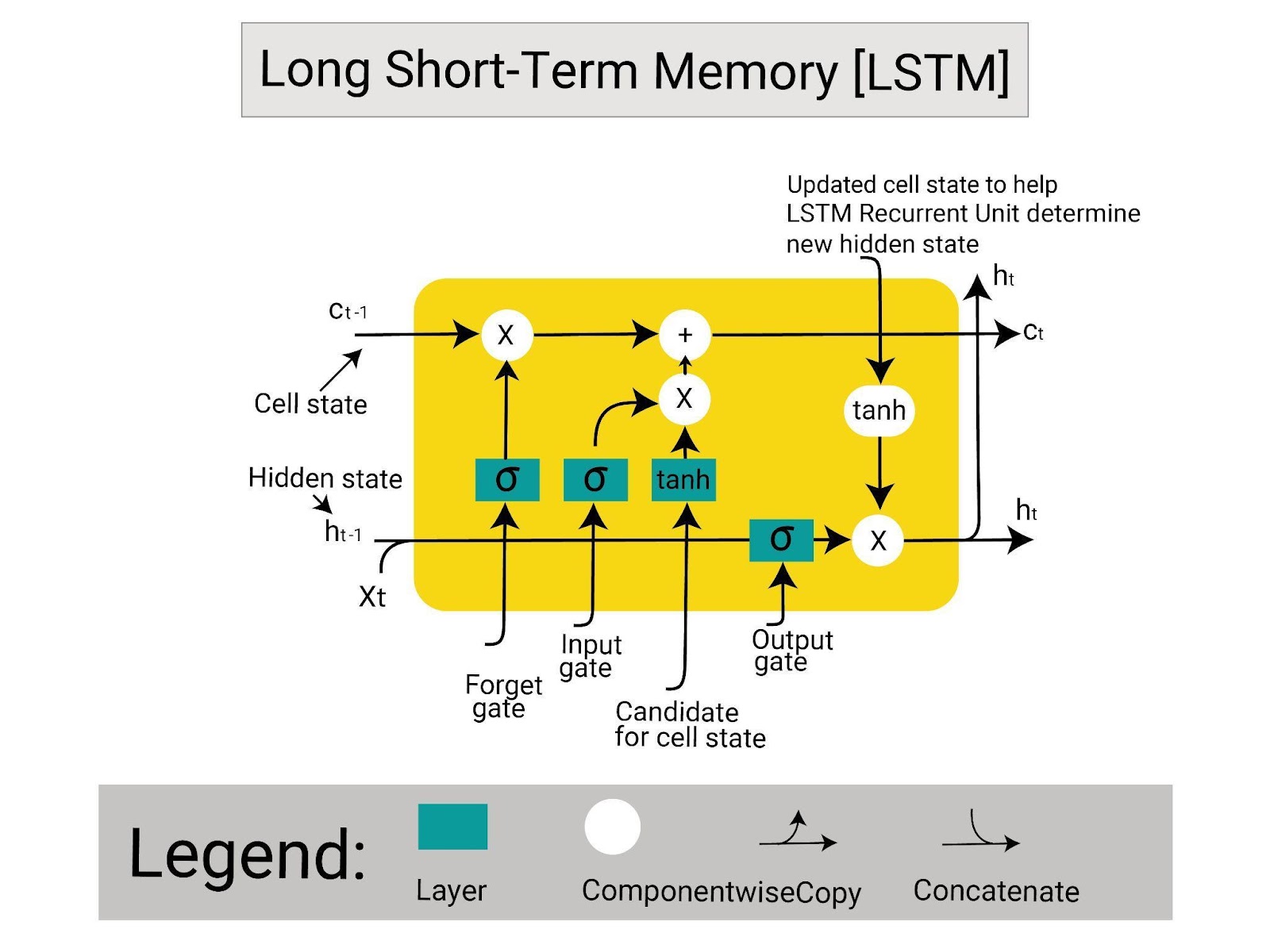

Long Short-Term Memory (LSTM) networks were designed to overcome the vanishing gradient problem that traditional RNNs face when learning long-term dependencies in…

- Building Models with PyTorch

- [Solved] Training a simple RNN

- Long Short-Term Memory network with PyTorch

- Why 3d input tensors in LSTM?

We’ll build a simple text-based email spam classifier to understand LSTMs. For this blog, we are going to use the PyTorch library for the implementation (because I only know…
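Here is a minimal sketch of such a spam classifier. It assumes the emails are already tokenized into integer ids and padded with a hypothetical `PAD = 0`. The vocabulary, the labels, and the class name `SpamClassifier` are toy placeholders. `pack_padded_sequence` makes the LSTM stop at each email's true length, so the final hidden state is not affected by padding.

```python
import torch
import torch.nn as nn
from torch.nn.utils.rnn import pack_padded_sequence

PAD = 0  # hypothetical padding index in a toy vocabulary


class SpamClassifier(nn.Module):
    def __init__(self, vocab_size: int, embed_dim: int = 32, hidden_size: int = 64):
        super().__init__()
        self.embed = nn.Embedding(vocab_size, embed_dim, padding_idx=PAD)
        self.lstm = nn.LSTM(embed_dim, hidden_size, batch_first=True)
        self.fc = nn.Linear(hidden_size, 1)  # one logit: spam vs. not spam

    def forward(self, tokens: torch.Tensor, lengths: torch.Tensor) -> torch.Tensor:
        emb = self.embed(tokens)  # (batch, max_len, embed_dim)
        # Packing makes the LSTM stop at each email's true length,
        # so h_n holds the state after the last real token, not after padding.
        packed = pack_padded_sequence(emb, lengths.cpu(), batch_first=True,
                                      enforce_sorted=False)
        _, (h_n, _) = self.lstm(packed)
        return self.fc(h_n[-1]).squeeze(-1)  # (batch,) logits


# Two toy "emails" already mapped to token ids, padded with PAD.
tokens = torch.tensor([[5, 8, 2, 9], [3, 7, PAD, PAD]])
lengths = torch.tensor([4, 2])
labels = torch.tensor([1.0, 0.0])  # 1 = spam (toy labels)

torch.manual_seed(0)
model = SpamClassifier(vocab_size=10)
loss = nn.BCEWithLogitsLoss()(model(tokens, lengths), labels)
loss.backward()
```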

One note: you don’t have to initialize the states if you use the LSTM this way (that is, if you’re not reusing the states). You can just call it with `x, _ = self.blstm(x)`, and it will automatically initialize the hidden and cell states to zeros.
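The following check illustrates this. Calling an `nn.LSTM` with no `(h_0, c_0)` gives the same output as passing explicit zero states. Note that the state tensors are shaped (num_layers * num_directions, batch, hidden_size) even when `batch_first=True`. The sizes are arbitrary.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
lstm = nn.LSTM(input_size=4, hidden_size=6, num_layers=2, batch_first=True)
x = torch.randn(3, 5, 4)  # (batch, seq_len, input_size)

# 1) No states passed: PyTorch uses zeros for h_0 and c_0.
out_default, _ = lstm(x)

# 2) Explicit zero states; batch_first does not change their layout.
h0 = torch.zeros(2, 3, 6)  # (num_layers * num_directions, batch, hidden_size)
c0 = torch.zeros(2, 3, 6)
out_explicit, _ = lstm(x, (h0, c0))

print(torch.allclose(out_default, out_explicit))  # True
```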

Implementation differences in LSTM layers: TensorFlow vs. PyTorch


In this article, we will explore the inner workings of LSTMs through an intuitive code walkthrough. Let’s decode how LSTM cells work and implement a basic version in Python.
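The article's own implementation is not reproduced here. Instead, here is a minimal sketch of one LSTM step written by hand with PyTorch tensors and checked against `nn.LSTMCell`. The helper name `lstm_cell_step` is hypothetical. The gate blocks are unpacked in PyTorch's storage order: input, forget, cell, output.

```python
import torch
import torch.nn as nn


def lstm_cell_step(x, h, c, W_ih, W_hh, b_ih, b_hh):
    """One LSTM step, written out by hand.

    W_ih: (4*H, input_size), W_hh: (4*H, H), biases: (4*H,).
    Gate blocks are stacked in PyTorch's order: input, forget, cell (g), output.
    """
    gates = x @ W_ih.T + b_ih + h @ W_hh.T + b_hh  # (batch, 4*H)
    i, f, g, o = gates.chunk(4, dim=1)
    i, f, o = torch.sigmoid(i), torch.sigmoid(f), torch.sigmoid(o)
    g = torch.tanh(g)
    c_next = f * c + i * g           # keep part of the old memory, add new content
    h_next = o * torch.tanh(c_next)  # expose a gated view of the memory
    return h_next, c_next


torch.manual_seed(0)
cell = nn.LSTMCell(input_size=3, hidden_size=5)
x = torch.randn(2, 3)
h = torch.zeros(2, 5)
c = torch.zeros(2, 5)

h_ref, c_ref = cell(x, (h, c))
h_ours, c_ours = lstm_cell_step(x, h, c, cell.weight_ih, cell.weight_hh,
                                cell.bias_ih, cell.bias_hh)
print(torch.allclose(h_ours, h_ref, atol=1e-6), torch.allclose(c_ours, c_ref, atol=1e-6))
```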

Apply a multi-layer long short-term memory (LSTM) RNN to an input sequence. For each element in the input sequence, each layer computes the following function:
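For reference, the PyTorch `nn.LSTM` documentation gives this function as shown below. Here $x_t$ is the input at time $t$, $h_t$ the hidden state, $c_t$ the cell state, $\sigma$ the sigmoid, and $\odot$ the Hadamard (element-wise) product. $i_t$, $f_t$, $g_t$, $o_t$ are the input, forget, cell, and output gates.

$$
\begin{aligned}
i_t &= \sigma(W_{ii} x_t + b_{ii} + W_{hi} h_{t-1} + b_{hi}) \\
f_t &= \sigma(W_{if} x_t + b_{if} + W_{hf} h_{t-1} + b_{hf}) \\
g_t &= \tanh(W_{ig} x_t + b_{ig} + W_{hg} h_{t-1} + b_{hg}) \\
o_t &= \sigma(W_{io} x_t + b_{io} + W_{ho} h_{t-1} + b_{ho}) \\
c_t &= f_t \odot c_{t-1} + i_t \odot g_t \\
h_t &= o_t \odot \tanh(c_t)
\end{aligned}
$$

In a multi-layer LSTM, the input $x_t$ of layer $l \ge 2$ is the hidden state $h_t$ of layer $l-1$, with dropout applied to it if dropout is enabled.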

For simplicity, let’s assume that the weight matrix is an identity matrix and the bias is zero. This is not obvious from the figures, but here is how it works: if you see two lines…
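This simplification can be checked numerically. The sketch below interprets "identity weights" as one n×n identity block per gate, for both the input-to-hidden and hidden-to-hidden weights, which requires input size = hidden size. That interpretation is an assumption. Under it, every gate receives the same pre-activation z = x + h, so one step reduces to a few element-wise operations.

```python
import torch
import torch.nn as nn

n = 2  # input size = hidden size, so each gate's weight block can be an identity
cell = nn.LSTMCell(input_size=n, hidden_size=n)
with torch.no_grad():
    # Stack four n x n identities: one block per gate (i, f, g, o).
    cell.weight_ih.copy_(torch.eye(n).repeat(4, 1))
    cell.weight_hh.copy_(torch.eye(n).repeat(4, 1))
    cell.bias_ih.zero_()
    cell.bias_hh.zero_()

x = torch.tensor([[1.0, -0.5]])
h0 = torch.zeros(1, n)
c0 = torch.zeros(1, n)
h1, c1 = cell(x, (h0, c0))

# With identity weights and zero bias, every gate sees the same pre-activation z = x + h.
z = x + h0
i = f = o = torch.sigmoid(z)
g = torch.tanh(z)
c_manual = f * c0 + i * g
h_manual = o * torch.tanh(c_manual)
print(torch.allclose(h1, h_manual, atol=1e-6), torch.allclose(c1, c_manual, atol=1e-6))
```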

Each sigmoid, tanh, or hidden-state layer in the cell is actually a set of nodes whose number equals the hidden layer size. Therefore, each of the “nodes” in the LSTM cell is…
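The parameter shapes of `nn.LSTM` make this concrete. Each of the four gate layers has `hidden_size` nodes, and PyTorch stacks them into matrices with `4 * hidden_size` rows. PyTorch also keeps two bias vectors per layer (`bias_ih` and `bias_hh`), whereas a Keras LSTM layer stores a single bias vector. The sizes below are arbitrary.

```python
import torch.nn as nn

input_size, hidden_size = 10, 20
lstm = nn.LSTM(input_size, hidden_size, num_layers=1)

for name, p in lstm.named_parameters():
    print(name, tuple(p.shape))
# weight_ih_l0 (80, 10)  -> 4 gates x 20 nodes, each reading 10 inputs
# weight_hh_l0 (80, 20)  -> 4 gates x 20 nodes, each reading 20 hidden values
# bias_ih_l0 (80,)
# bias_hh_l0 (80,)
```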

Understanding input shape to PyTorch LSTM
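The three axes of an LSTM input tensor are time, batch, and features. By default `nn.LSTM` expects (seq_len, batch, input_size). With `batch_first=True` it expects (batch, seq_len, input_size), while `h_n` and `c_n` keep the (num_layers * num_directions, batch, hidden_size) layout. The sizes below are arbitrary.

```python
import torch
import torch.nn as nn

seq_len, batch, features, hidden = 7, 3, 5, 4

# Default layout: (seq_len, batch, input_size)
lstm = nn.LSTM(features, hidden)
out, (h_n, c_n) = lstm(torch.randn(seq_len, batch, features))
print(out.shape)  # torch.Size([7, 3, 4]): one hidden vector per step and sequence
print(h_n.shape)  # torch.Size([1, 3, 4]): (num_layers * num_directions, batch, hidden)

# batch_first=True: (batch, seq_len, input_size); h_n/c_n keep their layout
lstm_bf = nn.LSTM(features, hidden, batch_first=True)
out_bf, (h_bf, _) = lstm_bf(torch.randn(batch, seq_len, features))
print(out_bf.shape)  # torch.Size([3, 7, 4])
print(h_bf.shape)    # torch.Size([1, 3, 4])
```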

Hey! PyTorch is amazing and I’m trying to learn how to use it at the moment. I have gotten stuck at training a simple RNN to predict the next value in a time series with a single…
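A common way to frame next-value prediction is shown in this minimal sketch. It cuts a single-feature series into sliding windows and trains an LSTM to predict the value that follows each window. The sine-wave data, the window length of 20, and the class name `NextValue` are assumptions for illustration only.

```python
import math
import torch
import torch.nn as nn

torch.manual_seed(0)

# Toy single-feature series (an assumption): a sampled sine wave.
t = torch.linspace(0, 8 * math.pi, 400)
series = torch.sin(t)

window = 20
# Sliding windows: input is series[i:i+window], target is series[i+window].
X = torch.stack([series[i:i + window] for i in range(len(series) - window)])
y = series[window:]
X = X.unsqueeze(-1)  # (num_windows, window, 1): one feature per step
y = y.unsqueeze(-1)  # (num_windows, 1)


class NextValue(nn.Module):
    def __init__(self, hidden_size: int = 32):
        super().__init__()
        self.lstm = nn.LSTM(1, hidden_size, batch_first=True)
        self.fc = nn.Linear(hidden_size, 1)

    def forward(self, x):
        out, _ = self.lstm(x)
        return self.fc(out[:, -1, :])  # predict from the last step only


model = NextValue()
opt = torch.optim.Adam(model.parameters(), lr=1e-2)
loss_fn = nn.MSELoss()

losses = []
for epoch in range(100):  # full-batch training on the toy data
    opt.zero_grad()
    loss = loss_fn(model(X), y)
    loss.backward()
    opt.step()
    losses.append(loss.item())
```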

Implementing an LSTM from scratch in PyTorch, step by step. In this post, we will implement a simple next-word predictor LSTM from scratch using torch.
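Here is a compact sketch of a next-word predictor. It uses `nn.LSTM` rather than a from-scratch cell, and it is not the post's own code. The one-sentence corpus and the class name `NextWordLSTM` are placeholders. Targets are the input shifted by one word. Because `cross_entropy` expects (N, C) logits, the batch and time axes are flattened together.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

# Toy corpus and vocabulary (assumptions for illustration only).
text = "the cat sat on the mat the cat ate".split()
vocab = sorted(set(text))
stoi = {w: i for i, w in enumerate(vocab)}
ids = torch.tensor([stoi[w] for w in text])


class NextWordLSTM(nn.Module):
    def __init__(self, vocab_size: int, embed_dim: int = 16, hidden_size: int = 32):
        super().__init__()
        self.embed = nn.Embedding(vocab_size, embed_dim)
        self.lstm = nn.LSTM(embed_dim, hidden_size, batch_first=True)
        self.fc = nn.Linear(hidden_size, vocab_size)

    def forward(self, tokens):
        out, _ = self.lstm(self.embed(tokens))  # (batch, seq_len, hidden)
        return self.fc(out)                     # (batch, seq_len, vocab) logits


torch.manual_seed(0)
model = NextWordLSTM(len(vocab))
opt = torch.optim.Adam(model.parameters(), lr=0.05)
inputs, targets = ids[:-1].unsqueeze(0), ids[1:].unsqueeze(0)  # shift by one word

for step in range(200):
    logits = model(inputs)
    # Flatten time into the batch axis: (N, C) logits vs. (N,) targets.
    loss = F.cross_entropy(logits.reshape(-1, len(vocab)), targets.reshape(-1))
    opt.zero_grad()
    loss.backward()
    opt.step()

# Predict the word after the prompt "the cat".
with torch.no_grad():
    prompt = torch.tensor([[stoi["the"], stoi["cat"]]])
    next_id = model(prompt)[0, -1].argmax().item()
print(vocab[next_id])
```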

- LSTM in Deep Learning: Architecture & Applications Guide

- LSTM autoencoder architecture

- Recurrent neural networks: building a custom LSTM cell

- Understanding a simple LSTM pytorch

I am currently trying to optimize a simple NN with Optuna. Besides the learning rate, batch size, etc., I want to optimize the network architecture as well. So up until now I…
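One way to make the architecture searchable is to build the model entirely from a dictionary of hyperparameters. Inside an Optuna objective, that dictionary could be filled with `trial.suggest_int`, `trial.suggest_categorical`, and `trial.suggest_float`. The sketch below leaves Optuna out so it runs on its own. `ConfigurableLSTM`, `build_from_params`, and the parameter names are hypothetical.

```python
import torch
import torch.nn as nn


class ConfigurableLSTM(nn.Module):
    """Hypothetical model whose architecture is fully described by its arguments,
    so a hyperparameter search can vary depth, width and direction."""

    def __init__(self, input_size, hidden_size, num_layers, bidirectional,
                 dropout, num_outputs):
        super().__init__()
        self.bidirectional = bidirectional
        self.lstm = nn.LSTM(
            input_size, hidden_size, num_layers=num_layers, batch_first=True,
            bidirectional=bidirectional,
            # nn.LSTM applies dropout only between stacked layers,
            # so use 0 for a single layer (PyTorch warns otherwise).
            dropout=dropout if num_layers > 1 else 0.0,
        )
        directions = 2 if bidirectional else 1
        self.head = nn.Linear(hidden_size * directions, num_outputs)

    def forward(self, x):
        _, (h_n, _) = self.lstm(x)
        if self.bidirectional:
            # Last layer's final forward (h_n[-2]) and backward (h_n[-1]) states.
            last = torch.cat([h_n[-2], h_n[-1]], dim=1)
        else:
            last = h_n[-1]
        return self.head(last)


def build_from_params(params: dict, input_size: int, num_outputs: int) -> nn.Module:
    # In an Optuna objective, `params` could come from trial.suggest_* calls.
    return ConfigurableLSTM(input_size, params["hidden_size"], params["num_layers"],
                            params["bidirectional"], params["dropout"], num_outputs)


model = build_from_params(
    {"hidden_size": 32, "num_layers": 2, "bidirectional": True, "dropout": 0.2},
    input_size=5, num_outputs=1,
)
print(model(torch.randn(4, 10, 5)).shape)  # torch.Size([4, 1])
```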

Popularly referred to as the gating mechanism, the gates in an LSTM store the memory components in analog form and turn them into a probabilistic score by doing…

Here’s the full implementation of a simple LSTM model in PyTorch which you can build upon for your use case:
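The following is a minimal sketch of such a model with a reusable training loop. It is not the original author's code. It assumes a regression task with batch-first input and mean-squared-error loss. The random data, the toy target, and the names `LSTMModel` and `train` are placeholders to replace with your own.

```python
import torch
import torch.nn as nn
from torch.utils.data import DataLoader, TensorDataset


class LSTMModel(nn.Module):
    def __init__(self, input_size, hidden_size, num_layers, output_size):
        super().__init__()
        self.lstm = nn.LSTM(input_size, hidden_size, num_layers, batch_first=True)
        self.fc = nn.Linear(hidden_size, output_size)

    def forward(self, x):
        out, _ = self.lstm(x)          # zero initial states by default
        return self.fc(out[:, -1, :])  # map the last hidden state to the output


def train(model, loader, epochs=5, lr=1e-3):
    opt = torch.optim.Adam(model.parameters(), lr=lr)
    loss_fn = nn.MSELoss()
    model.train()
    for epoch in range(epochs):
        total = 0.0
        for xb, yb in loader:
            opt.zero_grad()
            loss = loss_fn(model(xb), yb)
            loss.backward()
            opt.step()
            total += loss.item() * len(xb)
        print(f"epoch {epoch}: loss {total / len(loader.dataset):.4f}")
    return model


# Random placeholder data: 256 sequences, 15 steps, 3 features, 1 target each.
torch.manual_seed(0)
X = torch.randn(256, 15, 3)
y = X.sum(dim=(1, 2)).unsqueeze(-1) * 0.1  # a learnable toy target
loader = DataLoader(TensorDataset(X, y), batch_size=32, shuffle=True)
model = train(LSTMModel(3, 32, 2, 1), loader)
```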

LSTMs are also sequential models and work the same way as RNNs, but each LSTM cell contains four gates (input, forget, cell, and output). We will talk about each gate…

LSTMs are an extension of recurrent neural networks: a special kind of RNN capable of handling long-term dependencies. LSTMs solve some of the shortcomings…

Hi all, I am writing a simple neural network using an LSTM to get some understanding of NER. I understand the whole idea but ran into some dimension issues; here’s…
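For token-level tagging such as NER, the model needs one prediction per token. The usual dimension pitfall is that `CrossEntropyLoss` expects (N, C) logits with (N,) targets, so batch and time must be flattened together. It also accepts (batch, C, seq_len) logits. The sketch below assumes token id 0 is padding and marks padded tag positions with -100, which `CrossEntropyLoss` ignores by default. The names and sizes are hypothetical.

```python
import torch
import torch.nn as nn


class Tagger(nn.Module):
    def __init__(self, vocab_size, num_tags, embed_dim=32, hidden_size=64):
        super().__init__()
        self.embed = nn.Embedding(vocab_size, embed_dim, padding_idx=0)
        self.lstm = nn.LSTM(embed_dim, hidden_size, batch_first=True, bidirectional=True)
        self.fc = nn.Linear(2 * hidden_size, num_tags)  # 2x: forward + backward

    def forward(self, tokens):                  # tokens: (batch, seq_len)
        out, _ = self.lstm(self.embed(tokens))  # (batch, seq_len, 2*hidden)
        return self.fc(out)                     # (batch, seq_len, num_tags)


torch.manual_seed(0)
batch, seq_len, vocab_size, num_tags = 2, 6, 50, 5
tokens = torch.randint(1, vocab_size, (batch, seq_len))
tags = torch.randint(0, num_tags, (batch, seq_len))
tokens[1, 4:] = 0   # second sentence is shorter: pad with token id 0
tags[1, 4:] = -100  # padded positions: ignored by CrossEntropyLoss by default

model = Tagger(vocab_size, num_tags)
logits = model(tokens)
# Flatten batch and time: (batch*seq_len, num_tags) vs. (batch*seq_len,).
loss = nn.CrossEntropyLoss()(logits.reshape(-1, num_tags), tags.reshape(-1))
loss.backward()
```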

- Model Definition: The lstm.ipynb/model.py file contains the from-scratch implementation of the LSTM model.

- Simple Implementation: The simple_implement.py file demonstrates how to use…

I am trying to do a very simple learning task so that I can better understand how PyTorch and LSTMs work. To that end, I am trying to learn a mapping from an input tensor to an output…

This task is similar to the procedure for a simple RNN, but with the understanding that LSTMs have a more complex internal structure. I’ll describe the procedure for an LSTM

Bi-LSTM Conditional Random Field Discussion

For this section, we will see a full, complicated example of a Bi-LSTM Conditional Random Field for named-entity recognition. The LSTM…
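Before adding a CRF on top, it helps to know what a bidirectional `nn.LSTM` returns. The CRF layer itself is not shown here. `out` concatenates the forward and backward states at every step, so its last dimension is 2 * hidden_size. In `h_n`, the forward direction's final state corresponds to the last time step and the backward direction's to the first. The sizes below are arbitrary.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
hidden = 4
bilstm = nn.LSTM(input_size=3, hidden_size=hidden, batch_first=True, bidirectional=True)
x = torch.randn(2, 5, 3)  # (batch, seq_len, features)
out, (h_n, c_n) = bilstm(x)

print(out.shape)  # torch.Size([2, 5, 8]): forward and backward states concatenated
print(h_n.shape)  # torch.Size([2, 2, 4]): (num_layers * 2, batch, hidden)

# The forward direction finishes at the LAST step, the backward direction at the FIRST.
fwd_final = out[:, -1, :hidden]
bwd_final = out[:, 0, hidden:]
print(torch.allclose(fwd_final, h_n[0]), torch.allclose(bwd_final, h_n[1]))  # True True
```

In a Bi-LSTM tagger, the per-step `out` vectors are what a linear layer would turn into per-tag emission scores for the CRF.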

LSTMs work well with sequential data and are often used in applications like next-word prediction, named entity recognition, and other natural language processing (NLP) tasks. In this tutorial, we have learned about the…

In this blog post, we’ll explore the application of LSTMs for sequence classification and provide a step-by-step guide on implementing a classification model using PyTorch. Understanding

Hello, I am new to PyTorch, and I built an LSTM model with embeddings to predict a target of size 720 using time series data with a sequence length of 14 and more than…
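The post does not say what the embeddings encode. The sketch below assumes one categorical feature per time step, for example a hypothetical calendar or store id, embedded and concatenated with a few numeric features. A linear head maps the final hidden state to all 720 target values at once. The class name and every size except 14 and 720 are illustrative.

```python
import torch
import torch.nn as nn


class EmbedLSTMForecaster(nn.Module):
    """Hypothetical setup: per time step, one categorical feature (embedded)
    plus a few numeric features; output is a 720-value vector."""

    def __init__(self, num_categories, embed_dim, num_numeric, hidden_size, output_size=720):
        super().__init__()
        self.embed = nn.Embedding(num_categories, embed_dim)
        self.lstm = nn.LSTM(embed_dim + num_numeric, hidden_size, batch_first=True)
        self.fc = nn.Linear(hidden_size, output_size)

    def forward(self, cat_ids, numeric):
        # cat_ids: (batch, seq_len) int64, numeric: (batch, seq_len, num_numeric)
        x = torch.cat([self.embed(cat_ids), numeric], dim=-1)
        _, (h_n, _) = self.lstm(x)
        return self.fc(h_n[-1])  # (batch, output_size)


torch.manual_seed(0)
batch, seq_len = 8, 14
model = EmbedLSTMForecaster(num_categories=7, embed_dim=4, num_numeric=3, hidden_size=64)
cat_ids = torch.randint(0, 7, (batch, seq_len))
numeric = torch.randn(batch, seq_len, 3)
pred = model(cat_ids, numeric)
print(pred.shape)  # torch.Size([8, 720])
```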

This article provides a tutorial on how to use Long Short-Term Memory (LSTM) in PyTorch, complete with code examples and interactive visualizations using W&B.
