torch.Tensor.softmax — PyTorch 2.2 documentation

By: Everly

Learn to install Meta’s Llama 3.1 locally with our step-by-step guide. Request access, configure, and test the latest model easily. This notebook shows how to get started with the new Llama

class torch.utils.tensorboard.writer.SummaryWriter(log_dir=None, comment='', purge_step=None, max_queue=10, flush_secs=120, filename_suffix=''). Writes

PYTORCH_TUTORIAL/11_softmax_and_crossentropy.py at main

Constructs a tensor with no autograd history (also known as a “leaf tensor”, see Autograd mechanics) by copying data. When working with tensors prefer using torch.Tensor.clone(),
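The following sketch contrasts the two copy paths. When you pass an existing tensor to torch.tensor(), PyTorch emits a UserWarning that recommends sourceTensor.clone().detach() instead. The variable names are illustrative only.

```python
import torch

# A tensor that participates in autograd.
src = torch.ones(3, requires_grad=True)

# Recommended way to copy an existing tensor: clone() copies the data,
# detach() drops the autograd history.
src_copy = src.clone().detach()

# torch.tensor() on plain Python data always copies it and returns a leaf tensor.
from_list = torch.tensor([1.0, 2.0, 3.0])

src_copy[0] = 5.0  # in-place write to the copy; src is unaffected
print(src)                                       # tensor([1., 1., 1.], requires_grad=True)
print(src_copy.requires_grad, src_copy.is_leaf)  # False True
```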

Investigating why a model implementation using SDPA (scaled dot-product attention) vs. no SDPA was not yielding exactly the same output in fp16 with the math backend, I pinned it down to a different
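Here is one way to check an SDPA path against a hand-written attention. It assumes torch.nn.functional.scaled_dot_product_attention with its default scale of 1/sqrt(head_dim) and runs in float32 on whatever backend PyTorch selects. The tensor shapes are arbitrary. Because the two paths can round differently, the sketch compares them with a tolerance instead of expecting bitwise equality. It does not reproduce the fp16 investigation described above.

```python
import math

import torch
import torch.nn.functional as F

torch.manual_seed(0)
# Shapes: (batch, heads, sequence length, head dim); chosen arbitrarily.
q = torch.randn(2, 4, 8, 16)
k = torch.randn(2, 4, 8, 16)
v = torch.randn(2, 4, 8, 16)


def manual_attention(q, k, v):
    # softmax(Q K^T / sqrt(d)) V, written out by hand.
    scores = q @ k.transpose(-2, -1) / math.sqrt(q.size(-1))
    return torch.softmax(scores, dim=-1) @ v


sdpa_out = F.scaled_dot_product_attention(q, k, v)
manual_out = manual_attention(q, k, v)

# Different kernels may accumulate in a different order, so compare with a
# tolerance rather than expecting identical bits.
max_diff = (sdpa_out - manual_out).abs().max().item()
print(max_diff)
print(torch.allclose(sdpa_out, manual_out, atol=1e-5))
```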

is_tensor. Returns True if obj is a PyTorch tensor. is_storage. Returns True if obj is a PyTorch storage object. is_complex. Returns True if the data type of input is a complex data type, i.e., one of torch.complex64 or
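This short sketch calls the three predicates on a complex tensor and on a few non-matching inputs.

```python
import torch

x = torch.zeros(2, dtype=torch.complex64)

print(torch.is_tensor(x))                # True
print(torch.is_tensor([0.0, 0.0]))       # False: a Python list is not a tensor
print(torch.is_storage(x))               # False: a tensor is not a storage object
print(torch.is_complex(x))               # True: complex64 is a complex dtype
print(torch.is_complex(torch.zeros(2)))  # False: the default float32 is not
```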

- torch — PyTorch 2.7 documentation

- PyTorch documentation — PyTorch 2.7 documentation

- torch_geometric.utils._softmax — pytorch_geometric documentation

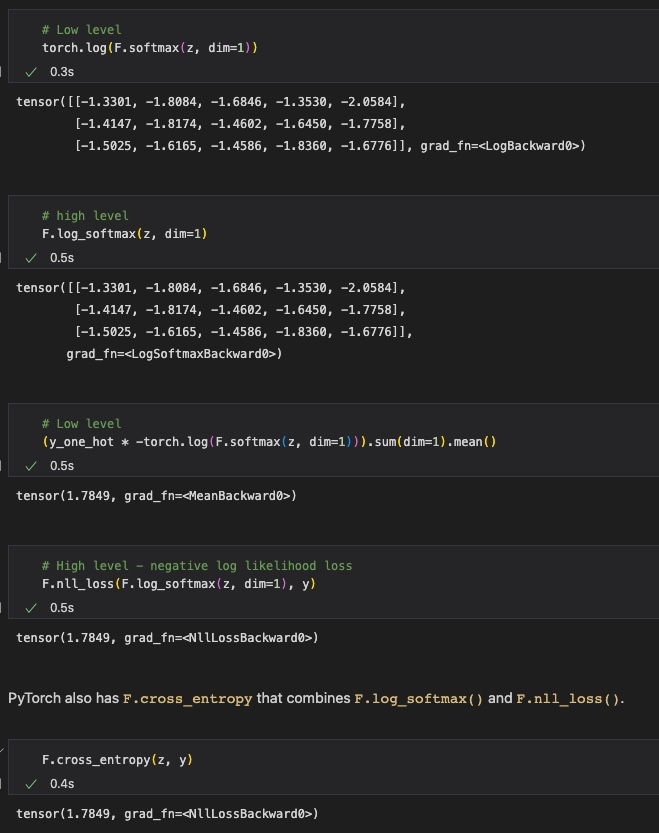

Learn all the basics you need to get started with this deep learning framework! In this part we learn about the softmax function and the cross entropy loss function. Softmax and cross
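The sketch below shows how softmax and cross-entropy relate in PyTorch. nn.CrossEntropyLoss takes raw logits and class indices and applies log-softmax internally, so it matches log-softmax followed by the negative log-likelihood. The logits and targets are made-up values.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

# Raw, unnormalized scores (logits) for 3 samples and 3 classes.
logits = torch.tensor([[2.0, 1.0, 0.1],
                       [0.5, 2.5, 0.3],
                       [1.2, 0.2, 3.0]])
targets = torch.tensor([0, 1, 2])  # class indices, not one-hot vectors

# CrossEntropyLoss applies log-softmax itself, so pass raw logits (no softmax first).
loss_builtin = nn.CrossEntropyLoss()(logits, targets)

# The same value step by step: log-softmax, then mean negative log-likelihood
# of the correct class for each sample.
log_probs = F.log_softmax(logits, dim=1)
loss_manual = -log_probs[torch.arange(len(targets)), targets].mean()

print(loss_builtin.item(), loss_manual.item())
```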

Run PyTorch locally or get started quickly with one of the supported cloud platforms. Tutorials. What's new in PyTorch tutorials. Learn the Basics. Familiarize yourself with PyTorch concepts

4.4. Softmax Regression Implementation from Scratch

Read the PyTorch Domains documentation to learn more about domain-specific libraries. Blogs & News PyTorch Blog. Catch up on the latest technical news and happenings . Community Blog.

PyTorch is an optimized tensor library for deep learning using GPUs and CPUs. Features described in this documentation are classified by release status: Stable: These features will be

Iterable-style datasets. An iterable-style dataset is an instance of a subclass of IterableDataset that implements the __iter__() protocol, and represents an iterable over data samples. This
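Here is a minimal iterable-style dataset. RangeStream is a hypothetical class that yields integers lazily from __iter__() and defines no __getitem__ or __len__. DataLoader batches its output as usual.

```python
import torch
from torch.utils.data import DataLoader, IterableDataset


class RangeStream(IterableDataset):
    """Hypothetical dataset that streams the integers in [start, end)."""

    def __init__(self, start, end):
        super().__init__()
        self.start = start
        self.end = end

    def __iter__(self):
        # Samples are produced one at a time; there is no random access.
        return iter(range(self.start, self.end))


loader = DataLoader(RangeStream(0, 6), batch_size=4)
batches = [batch.tolist() for batch in loader]
print(batches)  # [[0, 1, 2, 3], [4, 5]]
```

With num_workers > 0, each worker process gets its own copy of the dataset, so a stream like this one would be duplicated. torch.utils.data.get_worker_info() can be used to split the range across workers.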

To create a tensor with pre-existing data, use torch.tensor(). To create a tensor with specific size, use torch.* tensor creation ops (see Creation Ops). To create a tensor with the same size (and
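The following sketch shows each creation route mentioned above, plus the related torch.*_like and tensor.new_* families. The shapes and values are arbitrary.

```python
import torch

# Pre-existing data: torch.tensor() copies it.
a = torch.tensor([[1, 2], [3, 4]])

# A specific size: torch.* creation ops.
b = torch.zeros(2, 3)
c = torch.ones(2, 3, dtype=torch.float64)

# Same size (and, by default, dtype and device) as another tensor: torch.*_like ops.
d = torch.zeros_like(a)

# Same dtype and device as another tensor but a different size: tensor.new_* methods.
e = a.new_ones(4)

print(a.dtype, d.dtype, e.dtype)  # torch.int64 torch.int64 torch.int64
```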

def softmax(src: Tensor, index: Optional[Tensor] = None, ptr: Optional[Tensor] = None, num_nodes: Optional[int] = None, dim: int = 0,) -> Tensor: r"""Computes a sparsely evaluated

The softmax implementation is specially optimized for PyTorch’s auto-differentiation and GPU support. That’s all we need to import softmax! Now let’s generate some
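This sketch shows autograd flowing through torch.softmax. It runs on a GPU when one is available and falls back to the CPU otherwise. It does not measure any optimization. It only checks the gradient of the first probability against the analytic softmax Jacobian: dp_0/dx_0 = p_0(1 - p_0) and dp_0/dx_j = -p_0 p_j for j ≠ 0. The input values are arbitrary.

```python
import torch

# Use a GPU if present; the same code runs on the CPU.
device = "cuda" if torch.cuda.is_available() else "cpu"

x = torch.tensor([2.2, 1.5, 0.8], device=device, requires_grad=True)
probs = torch.softmax(x, dim=0)

# Backpropagate from the first probability only.
probs[0].backward()
print(x.grad)

# Analytic gradient of p_0 with respect to each input, for comparison.
p = probs.detach()
expected = -p[0] * p
expected[0] = expected[0] + p[0]
print(torch.allclose(x.grad, expected))
```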

torch.utils.data — PyTorch 2.7 documentation

DTensor Class APIs. DTensor is a torch.Tensor subclass. This means that once a DTensor is created, it can be used in a very similar way to torch.Tensor, including running different types

Introduction. In this tutorial, we will apply dynamic quantization to a BERT model, closely following the BERT model from the HuggingFace Transformers examples. With this step-by
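Here is a minimal sketch of dynamic quantization. It assumes a small nn.Sequential stand-in rather than BERT, and torch.ao.quantization.quantize_dynamic with int8 weights. The nn.Linear layers are swapped for quantized versions, and activations are quantized on the fly at inference time. It also assumes the platform's default quantized engine supports these ops, as it normally does on x86 and ARM CPUs.

```python
import torch
import torch.nn as nn
from torch.ao.quantization import quantize_dynamic

# Small stand-in model; the tutorial itself quantizes a BERT model.
model = nn.Sequential(nn.Linear(16, 8), nn.ReLU(), nn.Linear(8, 2)).eval()

# Replace every nn.Linear with a dynamically quantized (int8 weight) version.
qmodel = quantize_dynamic(model, {nn.Linear}, dtype=torch.qint8)

x = torch.randn(4, 16)
print(qmodel)
print(qmodel(x).shape)  # torch.Size([4, 2])
```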

Per-sample-grads, the efficient way, using function transforms. We can compute per-sample gradients efficiently by using function transforms. The torch.func function transform API
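The sketch below computes per-sample gradients with torch.func. It assumes a tiny nn.Linear model and an MSE loss chosen for illustration. grad differentiates a single-sample loss with respect to the parameters, and vmap maps that over the batch. functional_call runs the module with an explicit parameter dict.

```python
import torch
import torch.nn.functional as F
from torch.func import functional_call, grad, vmap

torch.manual_seed(0)
model = torch.nn.Linear(3, 1)
# Detached parameters, passed explicitly so grad can differentiate w.r.t. them.
params = {name: p.detach() for name, p in model.named_parameters()}


def sample_loss(params, x, y):
    # Inside vmap each call sees one sample, so add the batch dim back.
    pred = functional_call(model, params, (x.unsqueeze(0),))
    return F.mse_loss(pred, y.unsqueeze(0))


inputs = torch.randn(5, 3)
targets = torch.randn(5, 1)

# grad(...) differentiates w.r.t. its first argument (params); vmap maps over
# dim 0 of inputs and targets while sharing params (in_dims=None).
per_sample_grads = vmap(grad(sample_loss), in_dims=(None, 0, 0))(params, inputs, targets)
print(per_sample_grads["weight"].shape)  # torch.Size([5, 1, 3])
```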

Given a value tensor src, this function first groups the values along the first dimension based on the indices specified in index, and then proceeds to compute the softmax
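To illustrate the grouping idea, here is a plain-PyTorch sketch. It is not torch_geometric's implementation. grouped_softmax is a hypothetical helper that handles only 1-D src and index. It subtracts each group's maximum for numerical stability (via scatter_reduce with "amax"), then normalizes by each group's sum of exponentials (via index_add).

```python
import torch


def grouped_softmax(src, index, num_groups=None):
    """Hypothetical helper: softmax of 1-D src computed separately per group in index."""
    if num_groups is None:
        num_groups = int(index.max()) + 1
    # Per-group maximum, subtracted before exp() for numerical stability.
    group_max = src.new_full((num_groups,), float("-inf"))
    group_max = group_max.scatter_reduce(0, index, src, reduce="amax", include_self=True)
    exp = (src - group_max[index]).exp()
    # Per-group sum of exponentials, gathered back to each element.
    group_sum = src.new_zeros(num_groups).index_add(0, index, exp)
    return exp / group_sum[index]


src = torch.tensor([1.0, 2.0, 3.0, 0.5, 0.5])
index = torch.tensor([0, 0, 1, 1, 1])  # elements 0-1 form group 0, elements 2-4 group 1
out = grouped_softmax(src, index)
print(out)
```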

Torch-TensorRT. In-framework compilation of PyTorch inference code for NVIDIA GPUs. Torch-TensorRT is an inference compiler for PyTorch, targeting NVIDIA GPUs via NVIDIA’s TensorRT

PyTorch 2.3 offers support for user-defined Triton kernels in torch.compile, allowing users to migrate their own Triton kernels from eager mode without experiencing

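The formula for the softmax loss function, taking numerical stability into account, is as follows: Over all samples, with the regularization penalty included, the loss function is: Let us start with L_i. f(i,j) is the (i,j)-th element of the matrix f(x,w). We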
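Because the formulas themselves are missing above, here is a hedged standard-library sketch of the usual numerically stable softmax loss. For one sample, L_i = -f(i, y_i) + log Σ_j exp(f(i, j)), computed after subtracting max_j f(i, j). Softmax is unchanged by adding a constant to every score, so the shift changes nothing mathematically but keeps exp() from overflowing. The total loss here is the mean of L_i plus an L2 penalty reg · Σ w². That penalty form and the names stable_softmax_loss, total_loss, and reg are assumptions; some texts use 0.5 · reg instead.

```python
import math


def stable_softmax_loss(scores, label):
    """Cross-entropy L_i for one sample, given its row of class scores f(i, j)."""
    # Shift by the maximum score: the result is identical, but exp() cannot overflow.
    shift = max(scores)
    shifted = [s - shift for s in scores]
    log_sum_exp = math.log(sum(math.exp(s) for s in shifted))
    return -(shifted[label] - log_sum_exp)


def total_loss(all_scores, labels, weights, reg):
    # Mean data loss over all samples plus an assumed L2 penalty reg * sum(w^2).
    data_loss = sum(stable_softmax_loss(s, y) for s, y in zip(all_scores, labels)) / len(labels)
    reg_loss = reg * sum(w * w for row in weights for w in row)
    return data_loss + reg_loss


# Large scores would overflow a naive exp(); the shifted version is fine and
# gives the same value as the scores [0.0, 1.0, 2.0].
print(stable_softmax_loss([1000.0, 1001.0, 1002.0], 2))
```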

Distribution. class torch.distributions.distribution.Distribution(batch_shape=torch.Size([]), event_shape=torch.Size([]), validate_args=None). Bases: object
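Categorical is one concrete Distribution subclass that ties back to softmax. When you construct it from logits, its probs are the softmax of those logits, and log_prob matches log-softmax. The logits below are arbitrary.

```python
import torch
from torch.distributions import Categorical

logits = torch.tensor([2.2, 1.5, 0.8])
dist = Categorical(logits=logits)  # a subclass of torch.distributions.Distribution

# Given logits, Categorical normalizes them with a softmax.
print(torch.allclose(dist.probs, torch.softmax(logits, dim=0)))  # True
print(dist.batch_shape, dist.event_shape)  # torch.Size([]) torch.Size([])

sample = dist.sample()  # a random class index
print(sample, dist.log_prob(sample))
```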

softmax(src: Tensor, index: Optional[Tensor] = None, ptr: Optional[Tensor] = None, num_nodes: Optional[int] = None, dim: int = 0) → Tensor. Computes a sparsely evaluated

Example logits: sample_logits = torch.tensor([2.2, 1.5, 0.8]). Softmax is implemented in PyTorch as torch.nn.functional.softmax(). Softmax highlights relative likelihoods and magnitudes of logits
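Applying torch.nn.functional.softmax to sample_logits shows three properties. The outputs sum to 1, the largest logit keeps the largest probability, and adding the same constant to every logit leaves the result unchanged. That last property is why only relative logit values matter.

```python
import torch
import torch.nn.functional as F

sample_logits = torch.tensor([2.2, 1.5, 0.8])
probs = F.softmax(sample_logits, dim=0)

print(probs)
print(probs.sum())  # 1, up to floating-point rounding
# Order is preserved: the largest logit gets the largest probability.
print(probs.argmax() == sample_logits.argmax())
# Shifting every logit by the same constant does not change the output.
print(torch.allclose(F.softmax(sample_logits + 10.0, dim=0), probs))
```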

The softmax function is generally used to rescale the output of your network so that the output vector can be interpreted as a probability
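For a batch of network outputs, the dim argument decides what gets normalized. The sketch assumes a hypothetical nn.Linear classifier head with 4 input features and 3 classes. Softmax over dim=1 turns each row (one sample) into a probability vector.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
# Hypothetical classifier head: 4 input features -> 3 class scores.
net = nn.Linear(4, 3)
batch = torch.randn(5, 4)

logits = net(batch)                   # shape (5, 3): one row of scores per sample
probs = torch.softmax(logits, dim=1)  # normalize across classes, not across the batch

print(probs.sum(dim=1))  # each row sums to 1
print((probs >= 0).all())
```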

torch.nn.Module and torch.nn.Parameter. In this video, we’ll be discussing some of the tools PyTorch makes available for building deep learning networks. Except for Parameter, the
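Here is a minimal custom nn.Module. Scale is a hypothetical example class. Assigning an nn.Parameter as an attribute registers it automatically, so it shows up in named_parameters() and receives gradients. A plain tensor attribute does not. For non-learnable state that should move with the module, register_buffer is the usual tool.

```python
import torch
import torch.nn as nn


class Scale(nn.Module):
    """Hypothetical module that multiplies its input by a learnable vector."""

    def __init__(self, size):
        super().__init__()
        # Assigning an nn.Parameter registers it with the module.
        self.weight = nn.Parameter(torch.ones(size))
        # A plain tensor attribute is NOT registered as a parameter.
        self.offset = torch.zeros(size)

    def forward(self, x):
        return x * self.weight + self.offset


m = Scale(3)
print([name for name, _ in m.named_parameters()])  # ['weight']

out = m(torch.tensor([1.0, 2.0, 3.0]))
out.sum().backward()
print(m.weight.grad)  # tensor([1., 2., 3.])
```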

Prune tensor by removing random (currently unpruned) units. prune.l1_unstructured. Prune tensor by removing units with the lowest L1-norm. prune.random_structured. Prune tensor by
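This sketch applies prune.l1_unstructured to a small nn.Linear layer. Pruning 50% of the 8 weight entries zeroes the 4 with the smallest absolute value. The original values are kept in weight_orig, and a weight_mask buffer holds the binary mask. prune.remove then makes the pruning permanent.

```python
import torch
import torch.nn as nn
import torch.nn.utils.prune as prune

torch.manual_seed(0)
layer = nn.Linear(4, 2)  # weight has 2 * 4 = 8 entries

# Zero out the 50% of weight entries with the smallest absolute value (L1 norm).
prune.l1_unstructured(layer, name="weight", amount=0.5)

# The original values move to weight_orig; weight_mask is stored as a buffer.
print([n for n, _ in layer.named_parameters()])  # ['bias', 'weight_orig']
print(layer.weight_mask)
print(int((layer.weight == 0).sum()))  # 4

# Make the pruning permanent: drop the mask and the reparametrization.
prune.remove(layer, "weight")
```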
