Tjvsonsbeek/Open-Ended-Medical-Vqa

By: Everly

Leveraging pre-trained language models, we introduce a novel method particularly suited for small, domain-specific, medical datasets. To properly communicate the medical images to the language model, we develop

Repository for the paper: Open-Ended Medical Visual Question Answering Through Prefix Tuning of Language Models (https://arxiv.org/abs/2303.05977) For questions: [email protected]
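As a rough illustration of the idea in the paper title, the sketch below shows one common reading of a "visual prefix": image features are projected into a few vectors in the language model's embedding space and prepended to the question token embeddings. This is not taken from the repository. The function names, dimensions, and random weights are all hypothetical, and the assumption that only the mapping is trained while the language model stays frozen is ours, not something the text here confirms.

```python
import random


def linear_map(vec, weights):
    """Multiply a feature vector by a weight matrix (one row per output value)."""
    return [sum(w * x for w, x in zip(row, vec)) for row in weights]


def visual_prefix(image_features, weights, prefix_len, embed_dim):
    """Project image features to prefix_len vectors of size embed_dim."""
    flat = linear_map(image_features, weights)
    return [flat[i * embed_dim:(i + 1) * embed_dim] for i in range(prefix_len)]


def build_lm_input(prefix, question_embeddings):
    """Prepend the visual prefix to the question token embeddings."""
    return prefix + question_embeddings


random.seed(0)
feat_dim, embed_dim, prefix_len = 8, 4, 2  # hypothetical toy sizes

# Hypothetical mapping weights; in this sketch they would be the trainable part,
# while the language model itself is assumed to stay frozen.
weights = [
    [random.uniform(-0.1, 0.1) for _ in range(feat_dim)]
    for _ in range(prefix_len * embed_dim)
]
image_features = [random.random() for _ in range(feat_dim)]

# Placeholder embeddings standing in for three question tokens.
question_embeddings = [[0.0] * embed_dim for _ in range(3)]

prefix = visual_prefix(image_features, weights, prefix_len, embed_dim)
lm_input = build_lm_input(prefix, question_embeddings)
print(len(lm_input), len(lm_input[0]))  # 5 4
```

The resulting sequence has `prefix_len` visual vectors followed by the question tokens, so a generative language model could condition on the image before producing a free-form answer.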

arXiv:2303.05977v1 [cs.CV] 10 Mar 2023

open-ended medical VQA is limited due to several challenges, such as finding ways to properly communicate the visual features and letting such large-scale models be employed on small

Open-Ended Medical Visual Question Answering Through Prefix Tuning of Language Models Tom van Sonsbeek, Mohammad Mahdi Derakhshani, Ivona Najdenkoska, Cees G. M. Snoek, and

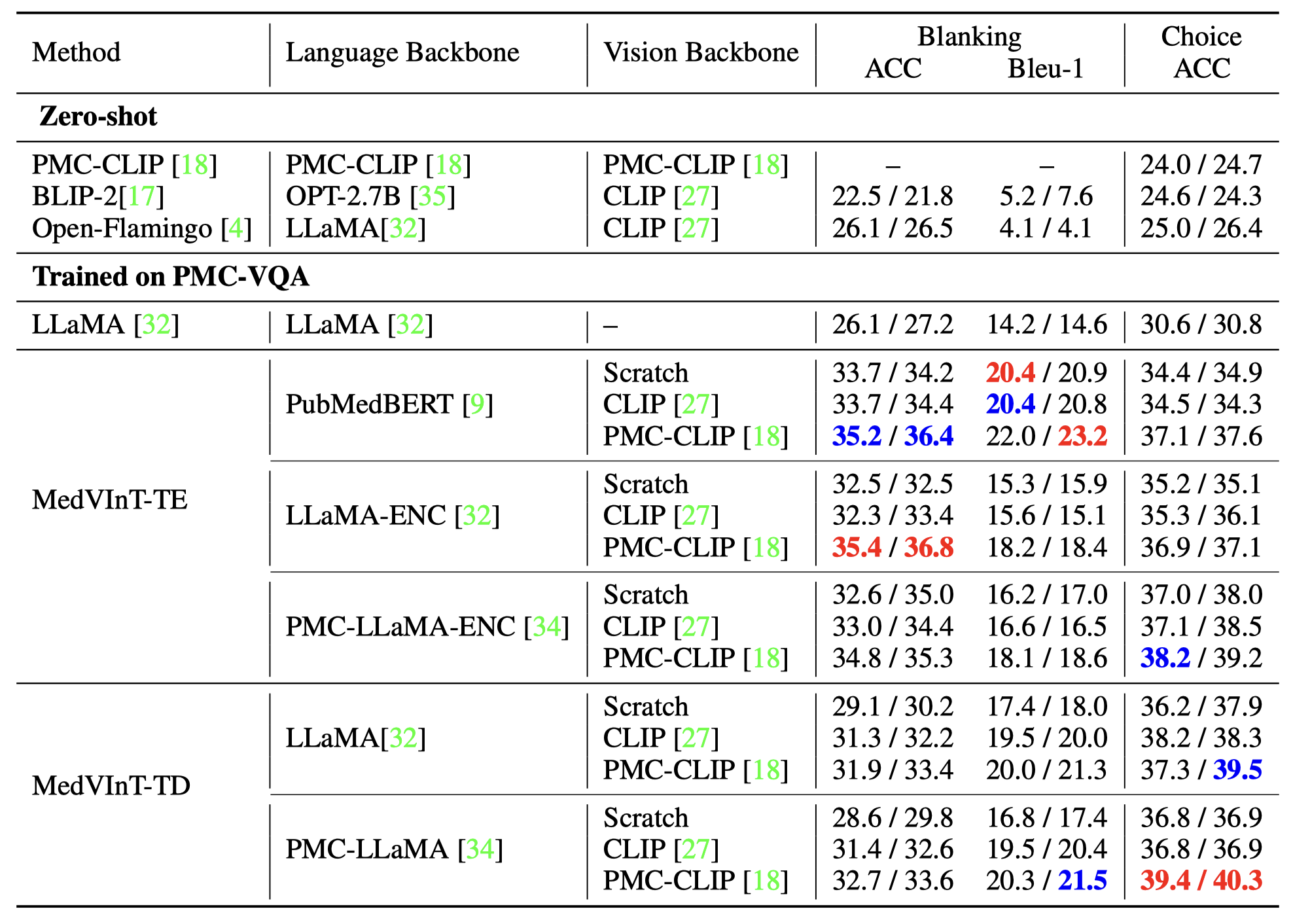

free-form, and open-ended VQA (Lin et al., 2023). Unlike traditional AI agents in medicine, often constrained to specific organs or diseases, a medical VQA system should adeptly handle

for VQA. For instance, medical VQA datasets commonly contain hundreds to thousands of free-form

arXiv:2303.05977v2 [cs.CV] 21 Jul 2023

To evaluate and enhance the region-learning capabilities of Vision-and-Large-Language Models (VLLMs) in medical VQA tasks, we propose a strategy to reconstruct the

Open-Ended Medical Visual Question Answering Through Prefix Tuning of Language Models. Medical Image Computing and Computer Assisted Intervention – MICCAI 2023, 726–736.

set of curated answers. We focus on open-ended VQA and, motivated by the recent advances in language models, consider it as a generative task. Leveraging pre-trained language models, we

Authors: Tom van Sonsbeek, Mohammad Mahdi Derakhshani, Ivona Najdenkoska, Cees G. M. Snoek, Marcel

Medical Visual Question Answering (VQA) is an important challenge, as it would lead to faster and more accurate diagnoses and treatment decisions. Most existing methods approach it as a
