NLP with RNNs and Beam Search: Beam Search Examples

By: Everly

This is the second article in my series “Instructions on Transformer for people outside NLP field, but with examples of NLP.”

1. Machine translation and seq2seq

Visualising Beam Search and Other Decoding Algorithms for

subsequently been shown to be effective for NLP and have achieved excellent results in semantic parsing (Yih et al., 2014), search query retrieval (Shen et al., 2014),

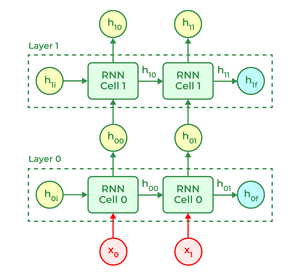

Recurrent Neural Networks (RNNs) are a type of neural network that is commonly used in Natural Language Processing (NLP) tasks. The main advantage of RNNs is their ability
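What sets an RNN apart is the hidden state that it carries from one time step to the next. Below is a minimal sketch of a simple (Elman-style) RNN forward pass. It assumes a tanh activation and tiny hand-picked weights chosen purely for illustration; `rnn_step` and `rnn_forward` are hypothetical names, not a library API.

```python
import math
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def matvec(m: Matrix, v: Vector) -> Vector:
    """Multiply a matrix (given as a list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]


def rnn_step(x: Vector, h_prev: Vector, W: Matrix, U: Matrix, b: Vector) -> Vector:
    """One time step: h_t = tanh(W h_{t-1} + U x_t + b)."""
    return [math.tanh(a + c + d) for a, c, d in zip(matvec(W, h_prev), matvec(U, x), b)]


def rnn_forward(xs: List[Vector], W: Matrix, U: Matrix, b: Vector, h0: Vector) -> List[Vector]:
    """Apply the same cell (same W, U, b) at every step, feeding each state into the next."""
    states: List[Vector] = []
    h = h0
    for x in xs:
        h = rnn_step(x, h, W, U, b)
        states.append(h)
    return states


# Toy sizes: 2-d inputs and a 2-d hidden state; the weights are illustrative only.
W = [[0.5, 0.0], [0.0, 0.5]]  # hidden-to-hidden
U = [[1.0, 0.0], [0.0, 1.0]]  # input-to-hidden
b = [0.0, 0.0]
inputs = [[1.0, 0.0], [0.0, 1.0], [0.0, 0.0]]
states = rnn_forward(inputs, W, U, b, h0=[0.0, 0.0])
for t, h in enumerate(states, start=1):
    print(t, [round(v, 3) for v in h])
```

The last input is all zeros, yet the third hidden state is still non-zero. The earlier inputs persist through the recurrent weights `W`.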

In this article, we will explore the concept of beam search, a widely used algorithm in natural language processing tasks such as machine translation and speech recognition.

Greedy search and beam search are well-known algorithms for language generation tasks in NLP (Natural Language Processing). Neural machine translation (NMT) and automatic summarization
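Greedy search is the simpler of the two, so it is a useful baseline. The following is a minimal sketch of greedy decoding. It uses a hypothetical toy "model", a dictionary mapping each prefix to next-word probabilities over a fixed output length of 2. `TOY_MODEL` and `greedy_decode` are illustrative names, not part of any library.

```python
import math
from typing import Dict, List, Tuple

# Hypothetical toy model: next-word probabilities for each prefix (fixed length 2).
TOY_MODEL: Dict[Tuple[str, ...], Dict[str, float]] = {
    (): {"the": 0.5, "a": 0.4, "one": 0.1},
    ("the",): {"dog": 0.4, "cat": 0.35, "bird": 0.25},
    ("a",): {"cat": 0.9, "dog": 0.05, "bird": 0.05},
    ("one",): {"cat": 0.5, "dog": 0.3, "bird": 0.2},
}


def greedy_decode(model: Dict[Tuple[str, ...], Dict[str, float]], length: int) -> Tuple[List[str], float]:
    """Take the single most probable next word at each position; return words and total log-prob."""
    prefix: Tuple[str, ...] = ()
    log_prob = 0.0
    for _ in range(length):
        dist = model[prefix]
        word = max(dist, key=lambda w: dist[w])
        log_prob += math.log(dist[word])
        prefix += (word,)
    return list(prefix), log_prob


words, lp = greedy_decode(TOY_MODEL, length=2)
print(words, round(math.exp(lp), 3))  # ['the', 'dog'] 0.2
```

Greedy search commits to "the" because it is the locally best first word. However, the sequence "a cat" has probability 0.4 × 0.9 = 0.36, which is higher than the 0.5 × 0.4 = 0.2 that greedy search finds.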

- Beam Search in Seq2Seq Model

- What is Beam Search? Explaining The Beam Search Algorithm

- Notes-for-Stanford-CS224N-NLP-with-Deep-Learning

Sequence-to-Sequence Learning as Beam-Search Optimization

Beam Search. Beam Search makes two improvements over Greedy Search. With Greedy Search, we took just the single best word at each position. In contrast, Beam Search
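Here is a minimal sketch of beam search on a hypothetical two-step toy model, the same one you would use to test greedy decoding. At each step, every kept hypothesis is expanded by every possible next word, and only the `beam_width` best hypotheses survive. Hypotheses are ranked by their total log-probability. `beam_search` and `TOY_MODEL` are illustrative names.

```python
import math
from typing import Dict, List, Tuple

# Hypothetical toy model: next-word probabilities for each prefix (fixed length 2).
TOY_MODEL: Dict[Tuple[str, ...], Dict[str, float]] = {
    (): {"the": 0.5, "a": 0.4, "one": 0.1},
    ("the",): {"dog": 0.4, "cat": 0.35, "bird": 0.25},
    ("a",): {"cat": 0.9, "dog": 0.05, "bird": 0.05},
    ("one",): {"cat": 0.5, "dog": 0.3, "bird": 0.2},
}


def beam_search(model: Dict[Tuple[str, ...], Dict[str, float]], length: int,
                beam_width: int) -> List[Tuple[Tuple[str, ...], float]]:
    """Keep the beam_width highest-scoring partial sequences at every step."""
    beams: List[Tuple[Tuple[str, ...], float]] = [((), 0.0)]  # (prefix, total log-prob)
    for _ in range(length):
        candidates = []
        for prefix, score in beams:
            for word, p in model[prefix].items():
                candidates.append((prefix + (word,), score + math.log(p)))
        # Prune: keep only the best beam_width expansions.
        candidates.sort(key=lambda c: c[1], reverse=True)
        beams = candidates[:beam_width]
    return beams


best_seq, best_score = beam_search(TOY_MODEL, length=2, beam_width=2)[0]
print(best_seq, round(math.exp(best_score), 3))  # ('a', 'cat') 0.36
```

With `beam_width=1`, this reduces to greedy search and returns `('the', 'dog')`. Keeping a second hypothesis is enough to recover "a cat" here.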

Problems with RNNs / LSTMs.

Byte-pair encoding (BPE), an old compression algorithm, was adapted to NLP by Sennrich et al. (2016).

Transformers for language modeling: we need to prevent the attention mechanism from using
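The "old compression algorithm" that Sennrich et al. (2016) adapted is byte-pair encoding (BPE). Below is a minimal sketch of learning BPE merges in that spirit: the algorithm repeatedly merges the most frequent adjacent symbol pair in a word-frequency table. Several details are assumptions of this sketch: the toy word counts, the `</w>` end-of-word marker, and breaking ties by first occurrence.

```python
from collections import Counter
from typing import Dict, List, Tuple

Vocab = Dict[Tuple[str, ...], int]  # word as a tuple of symbols -> corpus frequency


def get_pair_counts(vocab: Vocab) -> Counter:
    """Count adjacent symbol pairs, weighted by word frequency."""
    pairs: Counter = Counter()
    for symbols, freq in vocab.items():
        for a, b in zip(symbols, symbols[1:]):
            pairs[(a, b)] += freq
    return pairs


def merge_pair(pair: Tuple[str, str], vocab: Vocab) -> Vocab:
    """Replace every occurrence of `pair` with a single merged symbol."""
    merged: Vocab = {}
    for symbols, freq in vocab.items():
        out: List[str] = []
        i = 0
        while i < len(symbols):
            if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == pair:
                out.append(symbols[i] + symbols[i + 1])
                i += 2
            else:
                out.append(symbols[i])
                i += 1
        merged[tuple(out)] = freq
    return merged


def learn_bpe(word_freqs: Dict[str, int], num_merges: int) -> List[Tuple[str, str]]:
    """Start from characters plus an end-of-word marker and learn num_merges merges."""
    vocab: Vocab = {tuple(w) + ("</w>",): f for w, f in word_freqs.items()}
    merges: List[Tuple[str, str]] = []
    for _ in range(num_merges):
        pairs = get_pair_counts(vocab)
        if not pairs:
            break
        best = max(pairs, key=lambda p: pairs[p])  # ties: first pair encountered wins
        vocab = merge_pair(best, vocab)
        merges.append(best)
    return merges


corpus = {"low": 5, "lower": 2, "newest": 6, "widest": 3}  # toy counts
print(learn_bpe(corpus, num_merges=4))
```

Frequent fragments such as "est" become single subword units after a few merges. Rare words can then be spelled with known pieces instead of an unknown-word token.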

Text Autocompletion with LSTM and Beam Search. In Chapter 9, “Predicting Time Sequences with Recurrent Neural Networks,” we explored how to use recurrent neural networks (RNNs)

Today, RNNs are being replaced by Transformers for NLP tasks. Beam search is a technique used to produce better output sequences with this kind of model. Now that we have a good

For more information about Stanford’s Artificial Intelligence professional and graduate programs, visit: https://stanford.io/3CvSXpK

This lecture covers:
1. RNN

- Source-language word embeddings E(s)
- RNN parameters, e.g., {W, U, b} for simple RNNs and 4x parameters for LSTMs
- Encoder RNN can be bidirectional!

Decoder RNN:
- word embeddings for the target language
- RNN
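The "4x parameters for LSTMs" follows from simple arithmetic. A simple RNN has one set {W, U, b}. An LSTM has four such sets: the input, forget, and output gates, plus the candidate cell. Below is a small sketch of the count that excludes word-embedding parameters. The sizes are assumed example values, and the helper names are hypothetical. Some libraries keep two bias vectors per block (for example, PyTorch's `nn.LSTM` has separate input-hidden and hidden-hidden biases), so their totals come out slightly higher.

```python
def rnn_params(d: int, h: int) -> int:
    """Simple RNN h_t = tanh(W h_{t-1} + U x_t + b): W is h x h, U is h x d, b has h entries."""
    return h * h + h * d + h


def lstm_params(d: int, h: int) -> int:
    """An LSTM has four gate/candidate blocks, each with its own W, U, b: 4x a simple RNN."""
    return 4 * rnn_params(d, h)


def encoder_params(d: int, h: int, cell: str = "rnn", bidirectional: bool = False) -> int:
    """A bidirectional encoder runs two independent RNNs (forward and backward)."""
    per_direction = lstm_params(d, h) if cell == "lstm" else rnn_params(d, h)
    return per_direction * (2 if bidirectional else 1)


# Example sizes (assumed): 256-d word embeddings, 512-d hidden state.
print(rnn_params(256, 512))                                  # 393728
print(lstm_params(256, 512))                                 # 1574912
print(encoder_params(256, 512, "lstm", bidirectional=True))  # 3149824
```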

Beam search is an algorithm used in many NLP and speech recognition models as a final decision-making layer to choose the best output given target variables like maximum

Towards AI on LinkedIn: NLP with RNNs and Beam Search

By the end, you will be able to build and train Recurrent Neural Networks (RNNs) and commonly-used variants such as GRUs and LSTMs; apply RNNs to Character-level Language Modeling;

Sequence-to-Sequence Learning as Beam-Search Optimization. Sam Wiseman and Alexander M. Rush, School of Engineering and Applied Sciences, Harvard University, Cambridge, MA, USA. {swiseman,srush}

- Notes on attention mechanism

- Recent Progress in RNN and NLP

- cs224n-2018-lecture12-Transformers and CNNs

- arXiv:1606.02960v2 [cs.CL] 10 Nov 2016

In this blog, I’ll walk you through the fundamentals of Beam Search — how it works, why it’s used in AI-driven systems, and how it balances efficiency with accuracy. By the

Recurrent neural networks (RNNs) are designed to process sequential data like time series and text. RNNs include loops that allow information to persist from one step to the next to model

We learnt about a basic seq2seq model as a type of conditional RNN model with an encoder/decoder network here. When we use this architecture we need a way to find the

Recent advances in Artificial Intelligence (AI) and Natural Language Processing (NLP) present promising solutions to adapt content, navigation, and presentation in a more

Deep learning has revolutionized natural language processing (NLP) and NLP problems that require a large amount of work in terms of designing new features. Tuning models can now be

1.4.5 Beam Search. Beam search is used in natural language processing (NLP) tasks such as machine translation. Abstractive text summarization using sequence-to-sequence RNNs and beyond. CoNLL 2016 (2016), 280.

The default algorithm for this job is beam search — a pruned version of breadth-first search. Quite surprisingly, beam search often returns better results than exact inference due to
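The phrase "pruned version of breadth-first search" can be made concrete. The sketch below runs the same level-by-level expansion twice. The first run is unpruned: an exact search over every sequence of a fixed length. The second run is pruned to `beam_width` hypotheses per level. The toy model and function names are hypothetical. On this model, a beam of width 2 discards the first word "z" early and misses the globally most probable sequence. This only illustrates search error; it does not reproduce the finding that beam search can outperform exact inference, which concerns the model's own errors rather than the search.

```python
import math
from typing import Dict, List, Tuple

Hyp = Tuple[Tuple[str, ...], float]  # (prefix, total log-probability)

# Hypothetical toy model in which the best first word looks only third-best at step 1.
TOY_MODEL: Dict[Tuple[str, ...], Dict[str, float]] = {
    (): {"x": 0.4, "y": 0.35, "z": 0.25},
    ("x",): {"a": 0.55, "b": 0.45},
    ("y",): {"a": 0.5, "b": 0.5},
    ("z",): {"a": 0.95, "b": 0.05},
}


def expand(model: Dict[Tuple[str, ...], Dict[str, float]], frontier: List[Hyp]) -> List[Hyp]:
    """One BFS level: extend every hypothesis by every possible next word."""
    return [
        (prefix + (w,), score + math.log(p))
        for prefix, score in frontier
        for w, p in model[prefix].items()
    ]


def exact_search(model: Dict[Tuple[str, ...], Dict[str, float]], length: int) -> Hyp:
    """Unpruned BFS: scores every sequence of the given length."""
    frontier: List[Hyp] = [((), 0.0)]
    for _ in range(length):
        frontier = expand(model, frontier)
    return max(frontier, key=lambda c: c[1])


def beam_search(model: Dict[Tuple[str, ...], Dict[str, float]], length: int, beam_width: int) -> Hyp:
    """The same BFS, pruned to the beam_width best hypotheses after each level."""
    frontier: List[Hyp] = [((), 0.0)]
    for _ in range(length):
        frontier = sorted(expand(model, frontier), key=lambda c: c[1], reverse=True)[:beam_width]
    return frontier[0]


for name, (seq, score) in [("exact", exact_search(TOY_MODEL, 2)),
                           ("beam k=2", beam_search(TOY_MODEL, 2, beam_width=2))]:
    print(name, seq, round(math.exp(score), 4))
```

Exact search finds ("z", "a") with probability 0.2375, while the width-2 beam returns ("x", "a") with 0.22. Widening the beam to 3 keeps every first word and recovers the exact answer on this toy model.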

In this paper, we tackle this problem by casting graph generation as auto-regressive sequence labeling and making its training aware of the decoding procedure by using a differentiable version of beam search.

Describe several decoding methods, including MBR and beam search.

Week 2 (Text Summaries): Compare RNNs and other sequential models to the more modern Transformer architecture,
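MBR (minimum Bayes risk) decoding takes a different route from beam search. Among a set of candidate outputs, it picks the one with the highest expected utility against the others, treating samples from the model as pseudo-references. Below is a minimal sketch that assumes uniformly weighted candidates and a toy unigram-F1 utility. Real systems typically use an evaluation metric such as BLEU instead. `unigram_f1` and `mbr_decode` are hypothetical names.

```python
from collections import Counter
from typing import List


def unigram_f1(hyp: List[str], ref: List[str]) -> float:
    """Toy utility (assumption): F1 over overlapping word counts."""
    overlap = sum((Counter(hyp) & Counter(ref)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(hyp)
    recall = overlap / len(ref)
    return 2 * precision * recall / (precision + recall)


def mbr_decode(candidates: List[List[str]]) -> List[str]:
    """Return the candidate with the highest average utility against all other candidates,
    which act as pseudo-references (uniform weights assumed)."""
    def expected_utility(i: int) -> float:
        refs = [c for j, c in enumerate(candidates) if j != i]
        return sum(unigram_f1(candidates[i], r) for r in refs) / len(refs)

    best = max(range(len(candidates)), key=expected_utility)
    return candidates[best]


# Hypothetical samples drawn from a model.
samples = [
    "the cat sat on the mat".split(),
    "the cat sat on a mat".split(),
    "a cat is on the mat".split(),
    "dogs run fast".split(),
]
print(" ".join(mbr_decode(samples)))  # the cat sat on a mat
```

The chosen output is the "consensus" candidate that overlaps most with the others. The outlier "dogs run fast" scores zero.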

The function first uses the model to find the top k words to start the translations (where k is the beam width). For each of the top k translations, it evaluates the conditional probabilities of
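That procedure can be sketched as follows. The sketch is not the function the text refers to. It rests on three assumptions: the model is a Python callable mapping a prefix to next-word probabilities, an `<eos>` token ends a translation, and hypotheses are scored by summed log-probabilities. `beam_translate` and `toy_model` are hypothetical names.

```python
import heapq
import math
from typing import Callable, Dict, List, Tuple

EOS = "<eos>"
Hyp = Tuple[Tuple[str, ...], float]  # (words so far, summed log-probability)
Model = Callable[[Tuple[str, ...]], Dict[str, float]]  # prefix -> P(next word | prefix)


def beam_translate(model: Model, beam_width: int, max_len: int) -> Hyp:
    # Step 1: the top-k first words start the k hypotheses.
    starts = [((w,), math.log(p)) for w, p in model(()).items()]
    beams: List[Hyp] = heapq.nlargest(beam_width, starts, key=lambda c: c[1])
    finished: List[Hyp] = []
    for _ in range(max_len - 1):
        # Hypotheses that already emitted <eos> leave the beam.
        finished += [b for b in beams if b[0][-1] == EOS]
        beams = [b for b in beams if b[0][-1] != EOS]
        if not beams:
            break
        # Step 2: extend each open hypothesis with the conditional probability
        # of every next word, then keep only the k best extensions overall.
        candidates = [
            (prefix + (w,), score + math.log(p))
            for prefix, score in beams
            for w, p in model(prefix).items()
        ]
        beams = heapq.nlargest(beam_width, candidates, key=lambda c: c[1])
    # Remaining beams either ended with <eos> or reached max_len.
    return max(finished + beams, key=lambda c: c[1])


def toy_model(prefix: Tuple[str, ...]) -> Dict[str, float]:
    """Hypothetical stand-in for a trained decoder."""
    table = {
        (): {"i": 0.6, "you": 0.4},
        ("i",): {"am": 0.7, EOS: 0.3},
        ("you",): {"are": 0.9, EOS: 0.1},
        ("i", "am"): {EOS: 1.0},
        ("you", "are"): {EOS: 1.0},
    }
    return table[prefix]


words, score = beam_translate(toy_model, beam_width=2, max_len=4)
print(words, round(math.exp(score), 2))  # ('i', 'am', '<eos>') 0.42
```

Scores here are raw sums of log-probabilities, so this sketch tends to favor shorter outputs. Length normalization is a common remedy.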

In this article, I will explore Beam Search and explain why it is used and how it works. We will briefly touch upon Greedy Search as a comparison so that we can understand

Key Hyperparameters and Their Impact. When working with Beam Search, hyperparameters play a crucial role in how effectively and efficiently the algorithm performs.
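Two hyperparameters usually come up first: the beam width k and a length penalty. Each step of beam search scores roughly k times as many candidates as greedy search. Whether a larger k improves output quality depends on the task and model. A length penalty counteracts the bias of summed log-probabilities toward short outputs. The sketch below assumes one common form, the GNMT penalty ((5 + |Y|) / 6)^α from Wu et al. (2016). It uses it to rerank two hypothetical finished hypotheses.

```python
import math
from typing import List, Tuple

Hyp = Tuple[List[str], float]  # (tokens, summed log-probability)


def length_penalty(length: int, alpha: float) -> float:
    """GNMT-style penalty ((5 + |Y|) / 6) ** alpha; alpha = 0 turns normalization off."""
    return ((5 + length) / 6) ** alpha


def rerank(hyps: List[Hyp], alpha: float) -> List[Hyp]:
    """Order finished hypotheses by log-probability divided by the length penalty, best first."""
    return sorted(hyps, key=lambda h: h[1] / length_penalty(len(h[0]), alpha), reverse=True)


# Hypothetical finished hypotheses from a beam.
hyps: List[Hyp] = [
    (["a", "cat", "<eos>"], math.log(0.20)),
    (["a", "black", "cat", "sat", "<eos>"], math.log(0.15)),
]
for alpha in (0.0, 1.0):
    print(alpha, " ".join(rerank(hyps, alpha)[0][0]))
# 0.0 a cat <eos>
# 1.0 a black cat sat <eos>
```

With α = 0, the shorter hypothesis wins on raw probability. With α = 1, the longer one is preferred, so α effectively trades brevity against completeness.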

This is a simple example, and in practice, you would likely want to use more advanced techniques such as attention mechanisms and beam search for decoding.

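To overcome this problem, we propose Diverse Beam Search (DBS), which decodes a diverse list of outputs by optimizing a diversity-augmented objective and can serve as an alternative to BS. We observe that our method, by controlling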
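In DBS, the beam is split into groups that are decoded one after another at each time step. A group's scores are lowered for tokens that earlier groups already chose at that step; a Hamming-style penalty is one of the diversity terms considered for DBS. The sketch below is heavily simplified, covering a single time step with one hypothesis per group and a shared next-word distribution. Full DBS applies this inside a beam search over the whole sequence. `diverse_step` and `strength` (the diversity weight λ) are hypothetical names.

```python
import math
from collections import Counter
from typing import Dict, List


def diverse_step(group_dists: List[Dict[str, float]], strength: float) -> List[str]:
    """Pick one next word per group; each group is penalized by `strength` for every
    earlier group that already chose the same word at this step (Hamming-style)."""
    chosen: Counter = Counter()
    picks: List[str] = []
    for dist in group_dists:
        best = max(dist, key=lambda w: math.log(dist[w]) - strength * chosen[w])
        picks.append(best)
        chosen[best] += 1
    return picks


# Hypothetical: three groups that share the same next-word distribution.
dists = [{"cat": 0.6, "dog": 0.3, "fox": 0.1}] * 3
print(diverse_step(dists, strength=0.0))  # ['cat', 'cat', 'cat']
print(diverse_step(dists, strength=2.0))  # ['cat', 'dog', 'fox']
```

Without the penalty, every group collapses onto the same word, which mirrors how plain beam search tends to return near-duplicate outputs. A large enough penalty pushes later groups toward alternatives.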
