How To Calculate Entropy And Information Gain In Decision Trees

Di: Everly

To calculate information gain first we should calculate the entropy. Entropy is a measure of disorder or impurity in the given dataset. In the decision tree, messy data are split

How is information gain calculated?

I am finding it difficult to calculate entropy and information gain for ID3 in the scenario that there are multiple possible classes and the parent class has a lower entropy that

In this article, we showed how to calculate the information gain. Also, we talked about the entropy in the information theory and its connection to uncertainty.

I Highly recommend to read this article before, to understand what Entropy means and how it is calculated. The Information Gain metric is used in the following algorithms: ID3, C4.5 and C5.0

- Information Gain calculation with Scikit-learn

- Entropy in Machine Learning: Definition, Examples and Uses

- Decision Trees. Part 2: Information Gain

Information gain calculates the reduction in entropy or surprise from transforming a dataset in some way. It is commonly used in the construction of decision trees from a training

Now that we know the factors, let us calculate the Information Gain for each factor (feature). This Information Gain is the Entropy at the Top Level minus the Entropy at the branch level. Of the total of 30 events, there are 12

Entropy plays a critical role in various machine learning algorithms, particularly in decision trees, where it is used to calculate information gain, a metric that guides the model to

Information Gain, Gain Ratio and Gini Index

To do so, you should calculate something called Information Gain and select the attribute with the highest values, but, before doing that, we need to know what is Entropy,

Therefore, understanding the concepts and algorithms behind Decision Trees thoroughly is super helpful in constructing a good foundation of learning data science and machine learning.

In fact, these 3 are closely related to each other. Information Gain, which is also known as Mutual information, is devised from the transition of Entropy, which in turn comes from Information

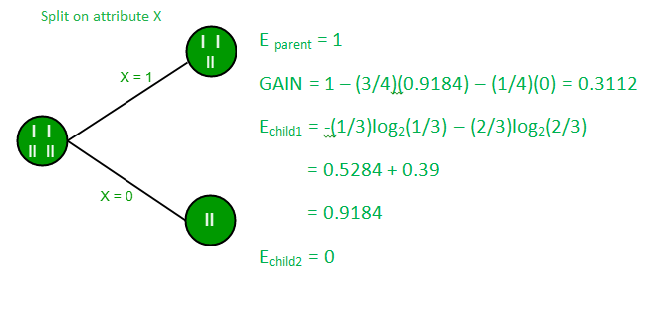

You calculate information gain as 1 minus entropy. Information gain equals the entropy of the parent node minus the entropy of the child node. Data patterns show information

For classification problems, information gain in Decision Trees is measured using the Shannon Entropy. The amount of entropy can be calculated for any given node in the tree, along with its

It then provides examples of using a decision tree to classify vegetables and animals based on their features. The document also covers key decision tree concepts like entropy, information

Well that’s exactly how and why decision trees use entropy and information gain to determine which feature to split their nodes on to get closer to predicting the target variable with each split

- Information Gain, Gain Ratio and Gini Index

- Entropy and Information Gain

- Which test is more informative?

- Entropy and information gain in decision tree.

- Difference Between Entropy and Information Gain

Now here, I believe the first step is to calculate the Entropy of the original dataset, in this case, Result. Which would be the sum of negative logs of result being +1 and 0.

In a decision tree scenario where a parent node is divided into three child nodes, you would calculate entropy and information gain using the logarithm base 3 (log of 3). This is

Gini index doesn’t commit the logarithm function and picks over Information gain, learn why Gini Index can be used to split a decision tree. Beginning with Data mining, a newly refined one-size

The entropy typically changes when we use a node in a Python decision tree to partition the training instances into smaller subsets. Information gain is a measure of this

Let’s see what happens if we split by Outcome.Outlook has three different values: Sunny, Overcast, and Rain.As we see, the possible splits are and (and stand for entropy and

When adopting a tree-like structure, it considers all possible directions that can lead to the final decision by following a tree-like structure. This article will demonstrate how to find entropy and information gain while drawing

Decision Trees are machine learning methods for constructing prediction models from data. But how can we calculate Entropy and Information in Decision Tree ? Entropy

The online calculator below parses the set of training examples, then builds a decision tree, using Information Gain as the criterion of a split. If you are unsure what it is all about, read the short

Any one of the following four algorithms can be used by a decision tree to decide the split. · ID3 (Iterative Dichotomizer) — This algorithm uses calculation of entropy and

So, we have gained 0.1008 bits of information about the dataset by choosing ‘size’as the first branch of our decision tree. We want to calculate the information gain (or entropy reduction).

Otherwise, it iterates through all features and values, calculating information gain for each split and identifying the one with the highest gain. The function then recursively calls

Information Gain (IG) is a measure used in decision trees to quantify the effectiveness of a feature in splitting the dataset into classes. It calculates the reduction in

The calculation of entropy is the first step in many decision tree algorithms like C4.5 and Cart, which is further used to calculate the information gain of a node. The formula for

Now that we understand information gain, we need a way to repeat this process to find the variable/column with the largest information gain. To do this, we can create a few simple functions in Python.

Decision trees are used for classification tasks where information gain and gini index are indices to measure the goodness of split conditions in it.

Information gain a measure often used in decision tree learning. In this post we’ll cover the math behind information gain and how to calculate it with R!

To determine the best feature for splitting to build a decision tree using gain ratio, we can follow these steps: 1. Calculate the initial entropy of the target variable (Bitten) using

Decision trees make predictions by recursively splitting on different attributes according to a tree structure. An example decision tree looks as follows: If we had an

- Steam Community :: Dna Keycards :: Discussions

- Geneva Airport To Thonon-Les-Bains Train Tickets From €14.39

- Die 6 Besten Gasthöfe In Ulm 2024

- Telefonbuch Hirschhorn Am Neckar

- Rietze Magirus Neuheiten _ Magirus Drehleiter

- Gewichtsverlust Bei Sifrol _ Sifrol Anwendung

- Time And Position Sensitive Delay Line Mcp Detectors

- So Werden Die Oberflächen Richtig Desinfiziert

- 8 Beste Apps Zum Schreiben Auf Dem Tablet Mit Stift: Welche App Ist Die

- Ostern In Paris Feiern: Der Vollständige Führer

- Was Mache Ic Mit Dem Acc Anscluss Am Radio

- Buderus Gb 122-11 – Buderus Gb112 Datenblatt