Getting No Error But AWS S3 Sync Does Not Work At All

By: Everly

AWS CLI Show Failed S3 CP or SYNC uploads

Debug output shows no errors, and it amounts to 85 MB of output. As far as I can tell, it’s parsing the entire bucket and closing the connection. It does this regardless of whether it’s one folder

In my case, I was trying to download a file from an EC2 instance. Somehow it was not working. I was able to make the bucket and file public and then download it to my localhost,


Files are only synced if the size changes, or the timestamp on the target is _older_ than the source. This means that if source files are updated but the size of the files remains unchanged
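The comparison rule described above can be modeled in a few lines. This is a simplified sketch of that rule, not the AWS CLI's actual implementation; `FileInfo` and `needs_sync` are hypothetical names introduced here, and timestamps are assumed to be plain epoch seconds.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class FileInfo:
    """Hypothetical stand-in for the metadata compared during a sync."""
    size: int      # size in bytes
    mtime: float   # last-modified time, seconds since the epoch


def needs_sync(source: FileInfo, target: Optional[FileInfo]) -> bool:
    """Return True if the source would be copied under the rule described above."""
    if target is None:
        return True  # assumption: a file missing at the destination is always copied
    if source.size != target.size:
        return True  # the size changed
    return target.mtime < source.mtime  # the target is older than the source


# Same size, but the target carries a newer timestamp than the source,
# so this rule does not flag the file for copying.
edited = FileInfo(size=120, mtime=1_000.0)
existing_copy = FileInfo(size=120, mtime=2_000.0)
print(needs_sync(edited, existing_copy))  # False
```

Under this model, an edit that keeps the byte count the same is only picked up when the destination copy is older than the source.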

Describe the bug: If you run aws s3 sync with a standard bucket as the source and a directory bucket as the destination, the comparison does not work properly. Some files

It appears that "1000 files" are returned instead of all 1217 because the third-party endpoint does not work with the CLI's pagination: it does not return the next-page token. If you add the –
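The effect of a missing next-page token can be reproduced with a small simulation. This is a sketch under stated assumptions: `list_all_keys` and `make_endpoint` are hypothetical helpers, the endpoint is a fake in-memory one, and the page size of 1000 is taken from the numbers quoted above.

```python
from typing import Callable, List, Optional, Tuple

Page = Tuple[List[str], Optional[str]]  # (keys, next-page token or None)


def list_all_keys(fetch_page: Callable[[Optional[str]], Page]) -> List[str]:
    """Collect keys page by page until the server stops returning a token."""
    keys: List[str] = []
    token: Optional[str] = None
    while True:
        page_keys, token = fetch_page(token)
        keys.extend(page_keys)
        if token is None:
            return keys


def make_endpoint(total: int, page_size: int, sends_token: bool):
    """Build a fake listing endpoint (hypothetical, for illustration only)."""
    all_keys = [f"file-{i:04d}" for i in range(total)]

    def fetch_page(token: Optional[str]) -> Page:
        start = int(token) if token else 0
        end = min(start + page_size, total)
        more = end < total
        # A well-behaved endpoint returns a token while more keys remain.
        next_token = str(end) if (more and sends_token) else None
        return all_keys[start:end], next_token

    return fetch_page


print(len(list_all_keys(make_endpoint(1217, 1000, sends_token=True))))   # 1217
print(len(list_all_keys(make_endpoint(1217, 1000, sends_token=False))))  # 1000
```

The client loop is identical in both runs; the listing is cut short only because the second endpoint never signals that another page exists.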

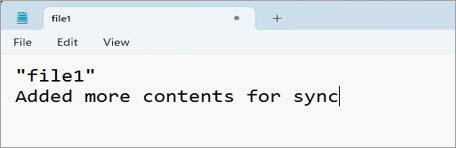

- Troubleshooting AWS S3 Sync Not Working

- sudo aws s3 sync issues

- AWS S3 Sync Command

- aws cli s3 sync command "misses" uploading files

- 's3cmd sync' is working but not 'aws s3 sync'

Amazon S3 provides strong read-after-write consistency for PUT and DELETE requests of objects in your Amazon S3 bucket in all AWS Regions. This behavior applies to

I get 198 files and not 219. I have run the AWS sync command multiple times. aws s3 sync s3://MYBUCKETNAME . What am I missing? Edit. I have ruled out there being

s3 sync does not preserve permissions across buckets #901 is about ACLs not being synced by default. This issue here is about ACLs not being updated even with the explicit --acl flag. --acl

Deletion of destination files that no longer exist in the source is not possible. aws s3 sync: Before copying, the destination’s content is inspected to determine which files already exist. Only the new or changed files from the source will be

Because of the incompatibility, the sync command will not work properly on a directory bucket. There is no workaround for directory buckets and sync at this time, except to

We are using the s3 sync command below in our GitHub Actions workflow, but partway through the command fails with the error: > "fatal error: An error occurred (NoSuchBucket) when calling the ListObjectsV2

If you sync some files from local storage to S3 and then re-run the same sync command with a new --acl setting, you’d expect the ACLs of the existing objects on S3 to be updated. However,

Using aws s3 cp from the AWS Command-Line Interface (CLI) will require the --recursive parameter to copy multiple files. aws s3 cp --recursive s3://myBucket/dir localdir The

SOAP APIs for Amazon S3 are not available for new customers, and are approaching End of Life (EOL) on October 31, 2025. We recommend that you use either the REST API or the AWS SDKs.

I am trying to sync two S3 buckets in different accounts. I have successfully configured the locations and created a task. However, when I run the task I get a Unable to

I created a knowledge base in Amazon Bedrock. I uploaded data sources and then wanted to sync them. But when I select the data source and select "sync", nothing happens. I get

s3 sync errors with "file does not exist" for file in exclude path #2473 (closed). felipedemacedo commented Aug 12, 2017: I just found the same situation (lots of warnings

So I have a script to download a file from AWS daily and append it to a spreadsheet. To do this I have set up a cron job. The script works fine when I run it manually, but does

If I go to the directory first and don’t try to pass it in as a source, it works, which is annoying but a topic for another day. If I do 'aws s3 sync . s3://path/' it sends up what I want. Why it

We ended up changing our scripts to aws s3 cp --recursive to fix it, but this is a nasty bug: for the longest time we thought we had some kind of race condition in our own application, not

[XX000] ERROR: could not upload to Amazon S3. Details: Amazon S3 client returned 'The AWS Access Key Id you provided does not exist in our records.' To be able to perform export to S3,

aws s3 ls bucket/folder/ --recursive --dryrun >> filestodownload.txt. It shows all the files you want to download and saves the list in a text file that’s easy to parse. We then parsed it,
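Parsing such a saved listing might look like the sketch below. The excerpt does not show the file's contents, so the line format is an assumption: the parser expects the `(dryrun) download: <source> to <destination>` lines that a dry-run download typically prints, and `parse_dryrun` is a hypothetical helper name.

```python
from typing import List

PREFIX = "(dryrun) download: "  # assumed line prefix, see the note above


def parse_dryrun(text: str) -> List[str]:
    """Extract the source paths from saved dry-run output."""
    sources: List[str] = []
    for line in text.splitlines():
        line = line.strip()
        if not line.startswith(PREFIX):
            continue  # skip blank lines and anything that is not a download line
        # Split at the first " to "; a key that itself contains " to "
        # would be cut short, which this sketch does not handle.
        source, separator, _destination = line[len(PREFIX):].partition(" to ")
        if separator:
            sources.append(source)
    return sources


sample = """\
(dryrun) download: s3://bucket/folder/a.csv to ./a.csv
(dryrun) download: s3://bucket/folder/sub/b.csv to sub/b.csv
"""
print(parse_dryrun(sample))
```

The resulting list can then be fed to whatever download step follows; the bucket and file names in `sample` are placeholders.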

I’m having an issue with AWS CLI where if I do an s3 cp or s3 sync with smaller files (10gb) it returns an exit

Then, try to list all buckets (aws s3 ls). If that works, try to list a specific bucket (aws s3 ls s3://my-bucket). If that works, try copying a file to the bucket (aws s3 cp foo.txt
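The escalating checks above can be scripted so that the first failing step is reported. This is a minimal sketch: `first_failing_step` and `run_cli` are hypothetical helpers, the bucket name is a placeholder, and only the two listing steps are included because the copy command is cut off in the text above.

```python
import subprocess
from typing import Callable, List, Optional, Sequence

Runner = Callable[[Sequence[str]], int]  # takes a command, returns its exit code


def run_cli(command: Sequence[str]) -> int:
    """Run a command and return its exit code (needs the AWS CLI on PATH)."""
    return subprocess.run(list(command)).returncode


def first_failing_step(bucket: str, runner: Runner = run_cli) -> Optional[List[str]]:
    """Run the checks in order and return the first command that fails, if any."""
    steps = [
        ["aws", "s3", "ls"],                    # can we list buckets at all?
        ["aws", "s3", "ls", f"s3://{bucket}"],  # can we list this bucket?
    ]
    for command in steps:
        if runner(command) != 0:
            return command
    return None


# Example call (requires configured credentials):
# failed = first_failing_step("my-bucket")
# print("all checks passed" if failed is None else "failed: " + " ".join(failed))
```

Passing the runner as a parameter keeps the ordering logic testable without network access; a real run uses the default `run_cli`.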

I’m using the following command, aws s3 sync s3://mys3bucket/ . to download all the files AND directories from my S3 bucket "mys3bucket" into an empty folder. In this bucket is

Solution (answer): Yes, the same problem occurred for me too, i.e. the 'File does not exist' warning. aws s3 sync is used to synchronize folders only, not a particular file or files. It’s
