Automated Data Quality Checks In Your Data Warehouse

By: Everly

This article presents a comprehensive framework for data quality assurance in data warehousing, addressing the critical need for maintaining data integrity, accuracy, and

Automated Data Testing In The Warehouse

Building a data warehouse with clean data. If you are building a data warehouse solution and/or running admin tasks in databases, then this article is for you. It answers this question: How to test data quality and send

Select data quality tools: Choose appropriate tools and technologies to automate your data quality checks. Look for data quality software that integrates well with your existing data infrastructure

For example, your reports might use a date column, and you want to ensure it lands in your data warehouse correctly formatted, or at least not empty (non-null). Here is
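A minimal sketch of such a check in Python is shown here. It is an illustration only and not the original article's code: the column name `event_date`, the `YYYY-MM-DD` format, and the function `check_date_column` are all assumptions made for the example.

```python
from datetime import datetime

def check_date_column(rows, column="event_date", fmt="%Y-%m-%d"):
    """Return (row_index, reason) pairs for rows whose date is missing or malformed.

    `column` and `fmt` are hypothetical defaults chosen for this sketch.
    """
    failures = []
    for i, row in enumerate(rows):
        value = row.get(column)
        if value is None or value == "":
            failures.append((i, "null"))
            continue
        try:
            # strptime raises ValueError when the string does not match fmt
            datetime.strptime(value, fmt)
        except (ValueError, TypeError):
            failures.append((i, "bad_format"))
    return failures

# Made-up sample rows: one valid, one null, one in the wrong format
sample_rows = [
    {"event_date": "2024-01-15"},
    {"event_date": None},
    {"event_date": "15/01/2024"},
]
date_failures = check_date_column(sample_rows)
```

In a real pipeline the same rule would more likely run as a SQL query inside the warehouse; the Python version only makes the logic explicit.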

Often there is a need to check and monitor data quality in your data warehouse. Given that in most of our projects, BigQuery is used as a DWH solution, the requirements for the data quality

Data Quality Automation is the process of using AI, machine learning, and rule-based automation to continuously monitor, detect, and resolve data quality issues without manual intervention.

- How to Automate Data Quality Checks and Alerts in a Data Warehouse

- Data Quality Checks: Best Practices & Examples

- Ensuring Data Quality in Your Data Warehouse

- Snowflake Data Quality: Monitoring, Best Practices

However, many organizations are operating in unmanaged data mode or organized cleanup mode, with low-quality data. Companies that make the move to proactive prevention

Data Quality Automation: Introduction and Best Practices

Learn the causes of bad data, essential data quality checks in data warehouses, and top tools to ensure accuracy, consistency, and reliability for better decisions.

Using a combination of statistical anomaly detection and rule-based algorithms, DvSum automatically recommends and can set data quality monitoring checks. These checks can track variances to data types, empty values, volume, shift in
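To make the idea of a statistical volume check concrete, the sketch below flags a daily row count that deviates strongly from recent history using a z-score. This is a generic technique, not a description of how DvSum or any other product works; the function name `is_volume_anomaly`, the threshold of 3 standard deviations, and the sample counts are assumptions.

```python
from statistics import mean, stdev

def is_volume_anomaly(history, today, threshold=3.0):
    """Return True when today's row count is more than `threshold`
    sample standard deviations away from the historical mean."""
    mu = mean(history)
    sigma = stdev(history)  # needs at least two historical values
    if sigma == 0:
        # A perfectly flat history: any change at all counts as an anomaly
        return today != mu
    return abs(today - mu) / sigma > threshold

# Made-up row counts for the last seven daily loads
daily_row_counts = [1000, 1020, 980, 1010, 990, 1005, 995]

drop_detected = is_volume_anomaly(daily_row_counts, today=400)
normal_day = is_volume_anomaly(daily_row_counts, today=1008)
```

A rule-based check (for example, "row count must be greater than zero") can sit alongside this kind of statistical check to catch failures that a short history would not reveal.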

In ensuring automatic data quality, several steps are involved in gathering data from different sources and monitoring data quality, and any problems with the data quality must be adequately

Data Quality and Observability platform for the whole data lifecycle, from profiling new data sources to full automation with Data Observability. Configure data quality checks

What Are Data Quality Checks (DQCs)? DQCs are automated tests that ensure your data is accurate, complete, and consistent. These checks are essential for identifying and

Automated data quality checks utilize technologies and frameworks to ensure that data meets certain standards before it is analyzed or processed further. By integrating these checks into

This ensures your data quality checks can keep pace as your data volume grows without compromising performance. Integration with Specialized Tools: Databricks integrates

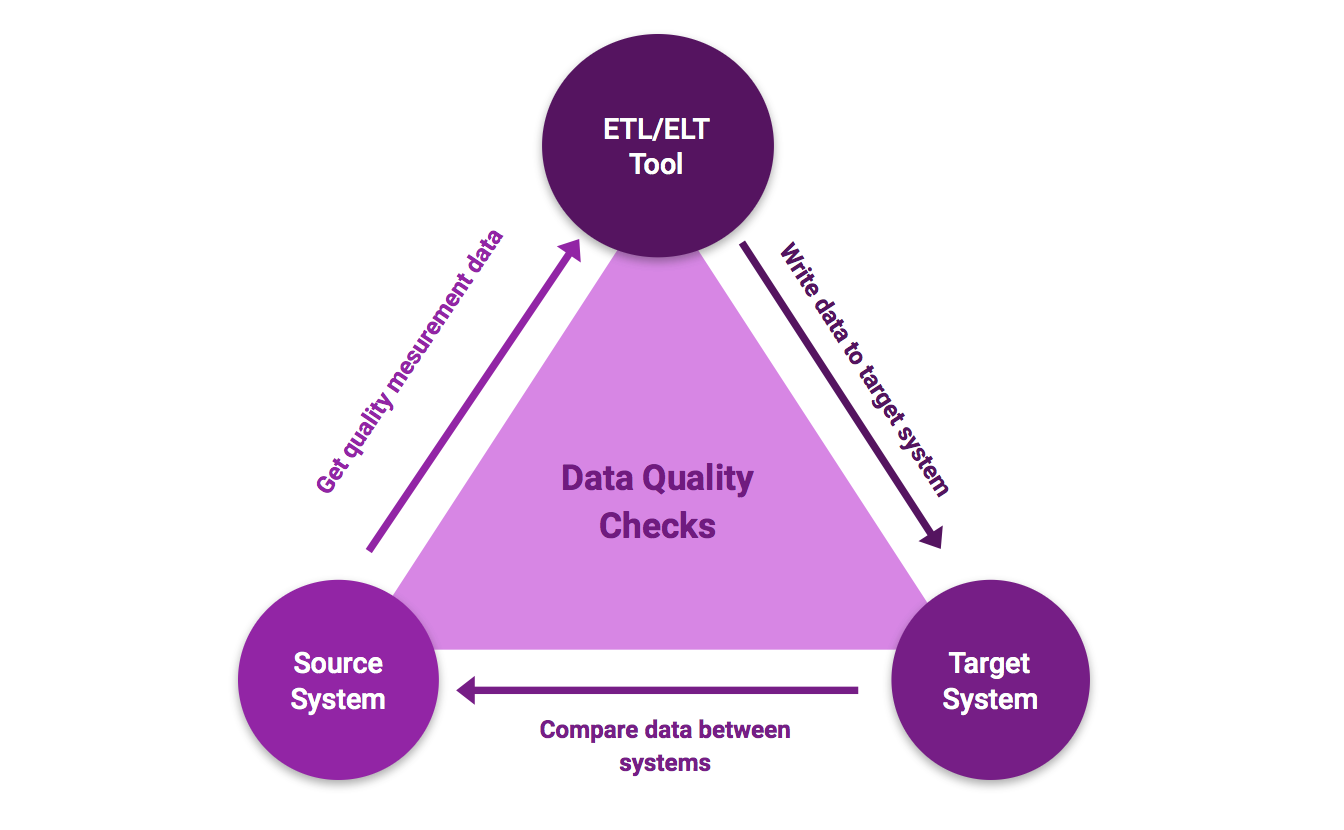

Automating Data Quality Checks in ETL Processes: Tools and

Integration within the Azure Ecosystem. The Azure ecosystem offers a complete set of tools that cover all aspects of data quality improvement: Data Cataloging and

Get the rundown on automated data quality checks—including what they are, what to monitor, and how to set them up.

Referential integrity checks are data quality checks that verify that every record in a child table has a matching record in its parent table. Referential integrity data quality
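A referential integrity check is commonly written as an anti-join that looks for child rows without a parent. The sketch below runs one against an in-memory SQLite database so it is self-contained; the `customers` and `orders` tables and their columns are hypothetical, and the same query shape should carry over to a warehouse SQL dialect with minor changes.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customers (customer_id INTEGER PRIMARY KEY);
    CREATE TABLE orders (order_id INTEGER PRIMARY KEY, customer_id INTEGER);
    INSERT INTO customers VALUES (1), (2);
    -- order 12 points at customer 3, which does not exist
    INSERT INTO orders VALUES (10, 1), (11, 2), (12, 3);
""")

# Orphaned child rows: orders whose customer_id has no match in customers
orphans = conn.execute("""
    SELECT o.order_id, o.customer_id
    FROM orders AS o
    LEFT JOIN customers AS c ON c.customer_id = o.customer_id
    WHERE c.customer_id IS NULL
    ORDER BY o.order_id
""").fetchall()
conn.close()
```

An empty result means the check passes; any returned rows can be logged or sent to an alerting channel.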

Improving data quality in a data warehouse involves implementing regular data audits, using data governance frameworks, and employing automated data profiling tools to check for issues like duplicates, null values,
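A very small profiling routine can illustrate two of the issues named above, duplicates and null values. It is a sketch under assumptions: the function `profile_rows`, the `id` key column, and the `email` column are invented for the example and do not come from any specific profiling tool.

```python
from collections import Counter

def profile_rows(rows, key_column, required_columns):
    """Report duplicated key values and per-column null counts."""
    key_counts = Counter(row.get(key_column) for row in rows)
    # Keys seen more than once; None keys are counted as nulls, not duplicates
    duplicate_keys = sorted(
        k for k, n in key_counts.items() if n > 1 and k is not None
    )
    null_counts = {
        col: sum(1 for row in rows if row.get(col) is None)
        for col in required_columns
    }
    return {"duplicate_keys": duplicate_keys, "null_counts": null_counts}

# Made-up sample data: id 2 appears twice, one email is missing
sample = [
    {"id": 1, "email": "a@example.com"},
    {"id": 2, "email": None},
    {"id": 2, "email": "b@example.com"},
    {"id": 3, "email": "c@example.com"},
]
profile = profile_rows(sample, key_column="id", required_columns=["email"])
```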

Data warehouse testing is becoming increasingly popular, and competent testers are in demand. Data-driven decisions are considered to be accurate. A good grasp of data

Introduce data quality checks before you load the data from the data lake into the data warehouse (or another single source of truth). For ad hoc analyses: Introduce customized

It empowers teams to quickly and easily perform continuous, comprehensive data quality checks. Key Features: Pre-built data quality checks: Lightup provides a library of pre-built data quality

Data Quality Checks and Anomaly Detection, all within AWS Glue (Python in Plain English). Learn how this new AWS Glue feature works and start defining your Data Quality checks inside your

Ensuring data accuracy and reliability is paramount for businesses that rely on analytics, machine learning, and automation. Poor data quality can lead to misguided

Data Quality in the Lakehouse. The architectural features of the Databricks Lakehouse Platform can assist with this process. By merging the data lake and data warehouse into a single

After all, it’s not like you didn’t have validation checks as part of your standard process. Full data-quality frameworks can be time-consuming and costly to establish. The

When importing data into your data warehouse, you will almost certainly encounter data quality errors at many steps of the ETL pipeline. An extraction step may fail due to a connection error

Instead of waiting for data quality issues to seep into your business intelligence (BI) tool and eventually be caught by a stakeholder, the practice of analytics engineering believes data quality testing should be
