Adversarial-For-Goodness/Co-Attack

By: Everly

Inspired by this, we present the brave new idea of benign adversarial attack to exploit adversarial examples for goodness in three directions: (1) adversarial Turing test, (2) rejecting malicious

Enhancing Adversarial Attacks through Chain of Thought

For the second issue, we propose a novel multimodal adversarial attack method on the VLP models called Collaborative Multimodal Adversarial Attack (Co-Attack), which collectively


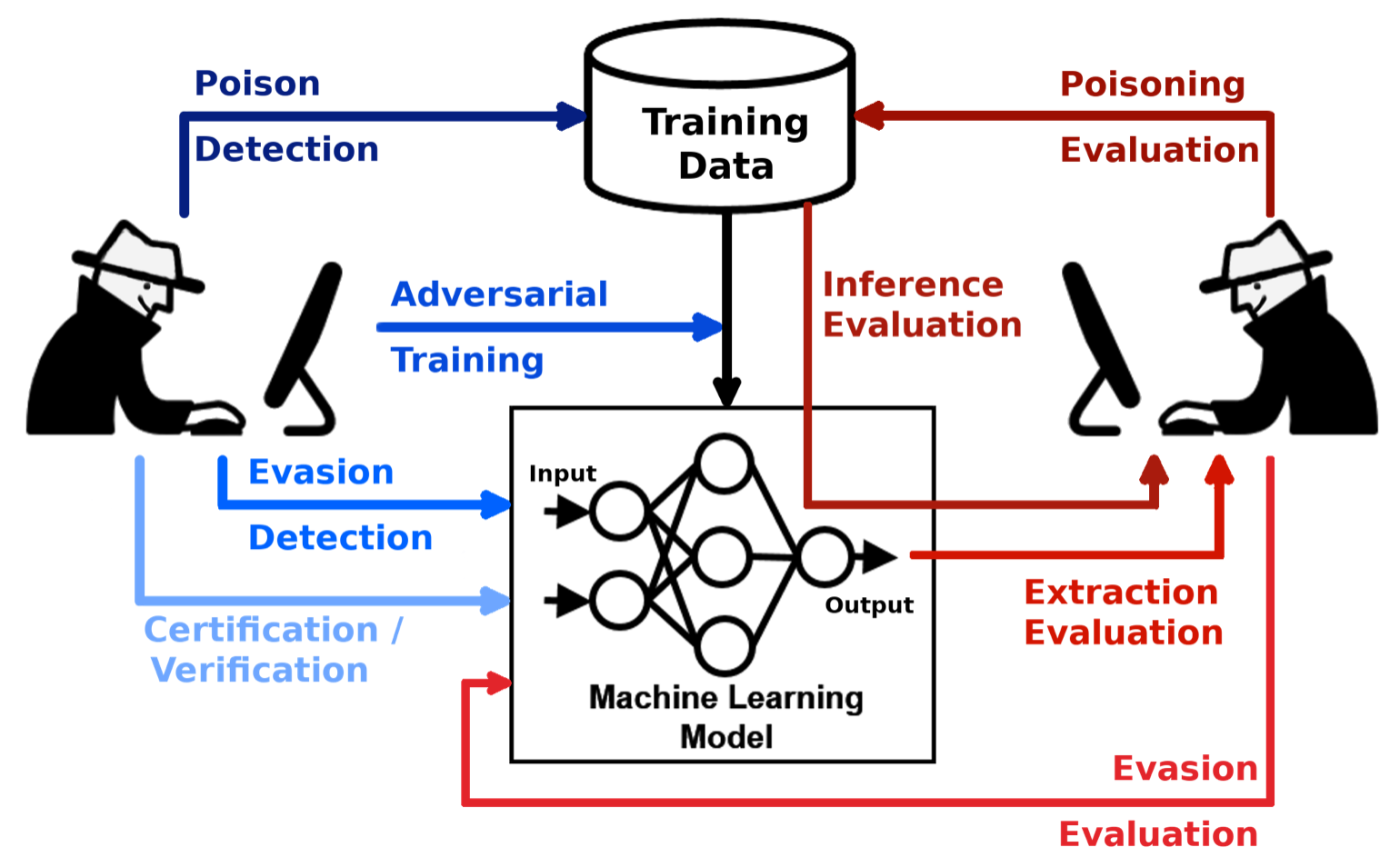

Adversarial attacks pose a critical threat to the reliability of AI-driven systems, exploiting vulnerabilities at the data, model, and deployment

- The results of attacking CLIP model are inconsistent with the

- Enhancing Adversarial Attacks through Chain of Thought

- Towards Adversarial Attack on Vision-Language Pre-training Models

- Question about the ASR and the "--adv 4". #1

… models called Collaborative Multimodal Adversarial Attack (Co-Attack), which collectively carries out the attacks on the image modality and the text modality. Experimental results demonstrated

Co-Attack: official PyTorch implementation of Towards Adversarial Attack on Vision-Language Pre-training Models

Hi @Eurus-Holmes, the reported value is attack success rate while the output of this code is accuracy of adversarial examples. To get ASR, you need to run adv=0 to get the
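
A minimal sketch of how the two quantities in this reply relate, assuming ASR is derived from the clean accuracy (the adv=0 run) and the accuracy on adversarial examples. The reply is truncated here, so the exact formula the maintainers use is not shown; the function name `attack_success_rate`, the `relative` switch, and the numbers below are hypothetical, and both conventions are common in the literature rather than confirmed for this repository.

```python
def attack_success_rate(clean_acc: float, adv_acc: float, relative: bool = True) -> float:
    """Convert two accuracies (in percent) into an attack success rate (in percent).

    Assumption: this is an illustrative helper, not the repository's own code.
    relative=True  -> share of originally correct predictions that the attack flips.
    relative=False -> absolute accuracy drop in percentage points.
    """
    if not 0.0 < clean_acc <= 100.0:
        raise ValueError("clean_acc must be in (0, 100]")
    if not 0.0 <= adv_acc <= 100.0:
        raise ValueError("adv_acc must be in [0, 100]")
    drop = clean_acc - adv_acc
    return 100.0 * drop / clean_acc if relative else drop


# Hypothetical numbers: 80% accuracy without attack, 20% under attack.
print(attack_success_rate(80.0, 20.0))                  # 75.0
print(attack_success_rate(80.0, 20.0, relative=False))  # 60.0
```

Whichever convention applies, the point of the reply holds: the script's output is an accuracy, so a lower number means a stronger attack, whereas a higher ASR means a stronger attack.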

In this paper, we propose novel generative models for creating adversarial examples, slightly perturbed images resembling natural images but maliciously crafted to fool

Official PyTorch implementation of Towards Adversarial Attack on Vision-Language Pre-training Models – Co-Attack/VEEval.py at main · adversarial-for-goodness/Co-Attack

Hi, thanks for your work on adversarial attacks against VLP models. I have a question about the results of Co-Attack attacking ALBEF on the VE task in this paper (Table 8).

Second, we proposed a novel multimodal attack method on the VLP models called Collaborative Multimodal Adversarial Attack (Co-Attack), which collectively carries out the attacks on the


Official PyTorch implementation of Towards Adversarial Attack on Vision-Language Pre-training Models – Co-Attack/analyze_retrieval_clip_angle.py at main · adversarial-for-goodness/Co-Attack


This paper proposes enhancing the robustness of adversarial attacks on aligned LLMs by integrating CoT prompts with the greedy coordinate gradient (GCG) technique. Using

- Towards Adversarial Attack on Vision-Language Pre-training Models. Jiaming Zhang, Qi Yi,
- Benign adversarial attack: Tricking algorithm for goodness. Jitao Sang, Xian Zhao, Jiaming
